Invited Talks

Title: People, Decisions, and Cognition: On Deeper Engagements with Machine Learning

June 22, 2014 at 8:30am

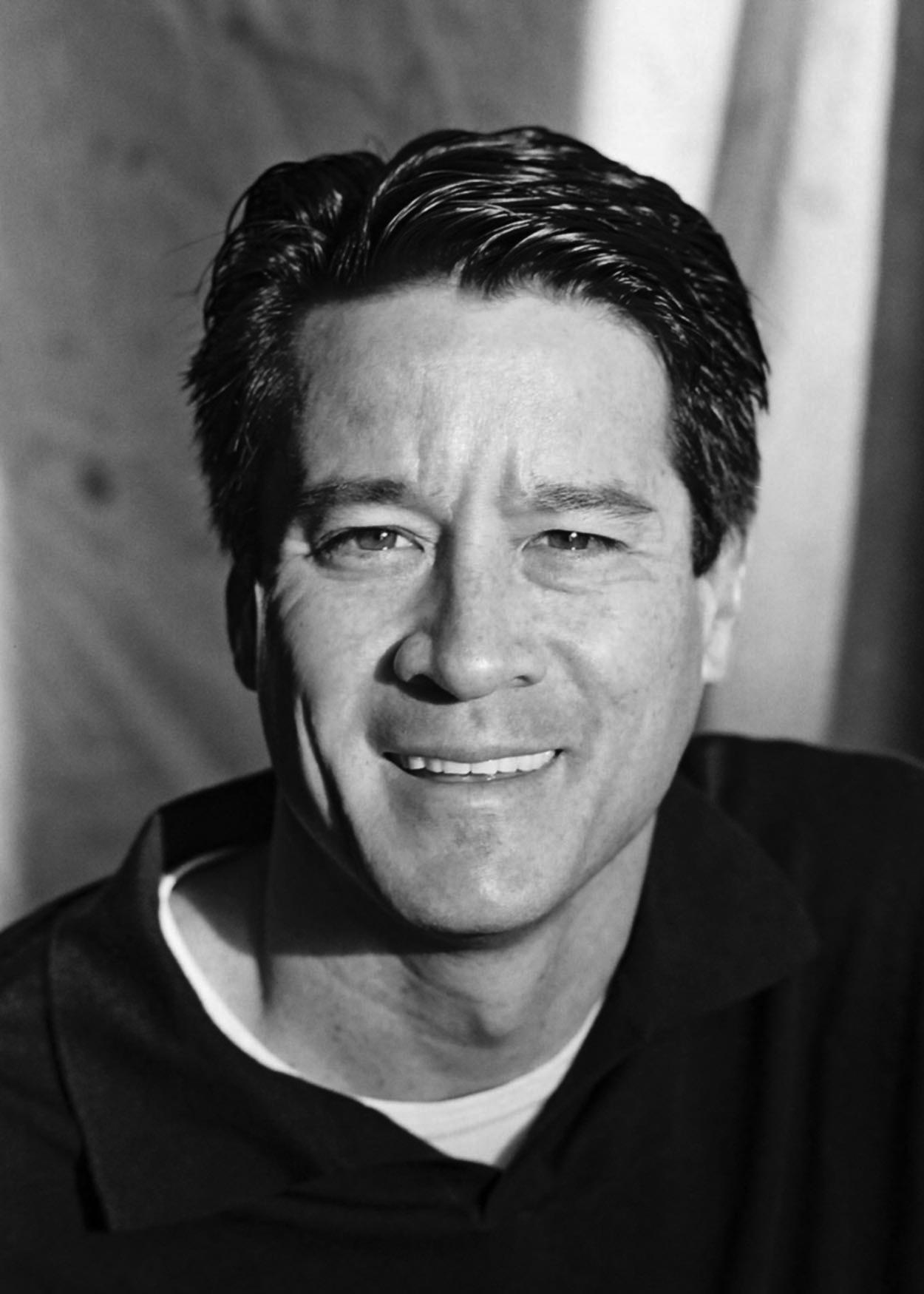

Keynote Speaker, Eric Horvitz, Microsoft

Eric Horvitz is a distinguished scientist and managing director at the Microsoft Research lab in Redmond, Washington. His interests span theoretical and practical challenges in machine learning, inference, and decision making. He has been elected a fellow of AAAI, AAAS, the American Academy of Arts and Sciences, and the National Academy of Engineering, and has been inducted into the CHI Academy. He has served as president of the AAAI, as chair of the AAAS Section on Information, Computing, and Communications, and on the NSF CISE Advisory Committee.

Abstract: I will share reflections on promising directions for engaging human intellect and effort at multiple touchpoints in machine learning, including mechanisms for interaction, supervision, and decision support. I will frame opportunities with studies on harnessing the complementary intellect of people and machines, guiding human effort for supervision, interactively refining learning and inference, and generating explanations and visualizations. I will conclude with thoughts on aiming machine learning at human cognition, with an eye on applications and services that leverage inferences about cognitive affordances and biases.

Title: Algorithmic Trading and Machine Learning

June 23, 2014 at 8:30am

Keynote Speaker, Michael Kearns, University of Pennsylvania

Michael Kearns is a professor in the Computer and Information Science department at the University of Pennsylvania, where he holds the National Center Chair and has joint appointments in the Wharton School. He is the founder of Penn's Networked and Social Systems Engineering (NETS) program (www.nets.upenn.edu) and director of Penn's Warren Center for Network and Data Sciences (www.warrencenter.upenn.edu). His research interests include topics in machine learning, algorithmic game theory, social networks, and computational finance. He has consulted extensively in the technology and finance industries.

Abstract: Traditional financial markets have undergone rapid technological change due to increased automation and the introduction of new mechanisms. Such changes have brought with them challenging new problems in algorithmic trading, many of which invite a machine learning approach. In this talk I will examine several algorithmic trading problems, focusing on their novel ML aspects, including limiting market impact, dealing with censored data, and incorporating risk considerations.

Title: On the Computational and Statistical Interface and "Big Data"

June 24, 2014 at 8:30am

Keynote Speaker, Michael I. Jordan, University of California, Berkeley

Michael I. Jordan is the Pehong Chen Distinguished Professor in the Department of Electrical Engineering and Computer Science and the Department of Statistics at the University of California, Berkeley. His research interests bridge the computational, statistical, cognitive and biological sciences, and have focused in recent years on Bayesian nonparametric analysis, probabilistic graphical models, spectral methods, kernel machines, and applications to problems in distributed computing systems, natural language processing, signal processing, and statistical genetics. Prof. Jordan is a member of the National Academy of Sciences, the National Academy of Engineering, and the American Academy of Arts and Sciences. He is a Fellow of the American Association for the Advancement of Science. He has been named a Neyman Lecturer and a Medallion Lecturer by the Institute of Mathematical Statistics, and has received the ACM/AAAI Allen Newell Award. He is a Fellow of the AAAI, ACM, ASA, CSS, IMS, IEEE, and SIAM.

Abstract: The rapid growth in the size and scope of datasets in science and technology has created a need for novel foundational perspectives on data analysis that blend the statistical and computational sciences. That the classical perspectives from these fields are not adequate to address emerging problems in "Big Data" is apparent from how sharply they diverge at an elementary level: in computer science, growth in the number of data points is a source of "complexity" that must be tamed via algorithms or hardware, whereas in statistics, growth in the number of data points is a source of "simplicity", in that inferences are generally stronger and asymptotic results or concentration theorems can be invoked. We present several research vignettes on topics at the computation/statistics interface, an interface that we aim to characterize in terms of theoretical tradeoffs between statistical risk, amount of data, and "externalities" such as computation, communication, and privacy. [Joint work with Venkat Chandrasekaran, John Duchi, Martin Wainwright and Yuchen Zhang.]