optimization

-

Jason Weston and Ron Weiss and Hector Yee

Affinity Weighted Embedding (pdf)

Supervised linear embedding models like Wsabie (Weston et al., 2011) and supervised semantic indexing (Bai et al., 2010) have proven successful at ranking, recommendation and annotation tasks. However, despite being scalable to large datasets, they do not take full advantage of the extra data due to their linear nature, and we believe they typically underfit. We propose a new class of models which aim to provide improved performance while retaining many of the benefits of the existing class of embedding models. Our approach works by reweighting each component of the embedding of features and labels with a potentially nonlinear affinity function. We describe several variants of the family, and show its usefulness on several datasets.

-

Yuan Zhou and Xi Chen and Jian Li

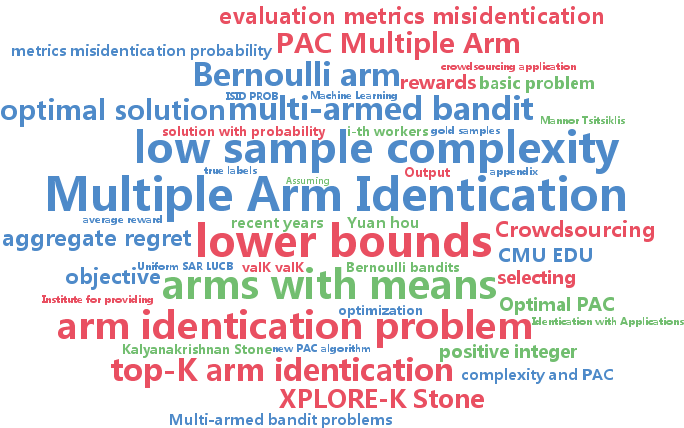

Optimal PAC Multiple Arm Identification with Applications to Crowdsourcing (pdf)

We study the problem of selecting $K$ arms with the highest expected rewards in a stochastic $N$-armed bandit game. Instead of using existing evaluation metrics (e.g., misidentification probability or the metric in EXPLORE-K), we propose to use the aggregate regret, which is defined as the gap between the average reward of the optimal solution and that of our solution. Besides being a natural metric by itself, we argue that in many applications, such as our motivating example from crowdsourcing, the aggregate regret bound is more suitable. We propose a new PAC algorithm, which, with probability at least $1-\delta$, identifies a set of $K$ arms with regret at most $\epsilon$. We provide the sample complexity bound of our algorithm. To complement this, we establish the lower bound and show that the sample complexity of our algorithm matches the lower bound. Finally, we report experimental results on both synthetic and real data sets, which demonstrate the superior performance of the proposed algorithm.
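The aggregate regret defined above can be illustrated with a short sketch. It only evaluates the metric for a given choice of arms and is not the PAC algorithm from the paper; the function name `aggregate_regret` and the example means are hypothetical, and the true expected rewards are assumed to be known for the purpose of illustration.

```python
import numpy as np


def aggregate_regret(true_means, chosen, k):
    """Gap between the average expected reward of the best k arms
    and the average expected reward of the chosen k arms.

    `true_means` are assumed known here purely for illustration;
    in the bandit setting they are unknown and must be estimated.
    """
    true_means = np.asarray(true_means, dtype=float)
    chosen = list(chosen)
    if len(chosen) != k or len(set(chosen)) != k:
        raise ValueError("chosen must contain exactly k distinct arm indices")
    # Average reward of the optimal solution: the k largest means.
    best_avg = np.sort(true_means)[-k:].mean()
    # Average reward of the proposed solution.
    chosen_avg = true_means[chosen].mean()
    return best_avg - chosen_avg


# Hypothetical 5-armed instance; arms 0 and 1 are the optimal pair.
means = [0.9, 0.8, 0.5, 0.4, 0.1]
regret = aggregate_regret(means, chosen=[0, 2], k=2)
```

Under this metric, swapping one optimal arm for a slightly worse one costs only a small amount, whereas a misidentification-probability metric would count the whole set as wrong.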

-

Megasthenis Asteris and Dimitris Papailiopoulos and Alexandros Dimakis

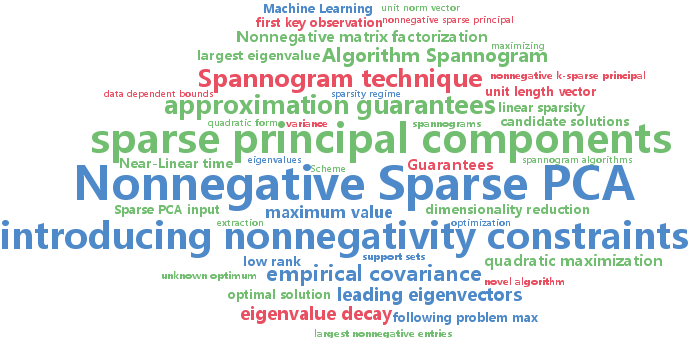

Nonnegative Sparse PCA with Provable Guarantees (pdf)

We introduce a novel algorithm to compute nonnegative sparse principal components of positive semidefinite (PSD) matrices. Our algorithm comes with approximation guarantees contingent on the spectral profile of the input matrix $A$: the sharper the eigenvalue decay, the better the approximation quality. If the eigenvalues decay like any asymptotically vanishing function, we can approximate nonnegative sparse PCA within any accuracy $\epsilon$ in time polynomial in the matrix size $n$ and desired sparsity $k$, but not in $1/\epsilon$. Further, we obtain a data-dependent bound that is computed by executing an algorithm on a given data set. This bound is significantly tighter than a-priori bounds and can be used to show that for all tested datasets our algorithm is provably within 40%-90% of the unknown optimum. Our algorithm is combinatorial and explores a subspace defined by the leading eigenvectors of $A$. We test our scheme on several data sets, showing that it matches or outperforms the previous state of the art.

-

Elad Eban and Elad Mezuman and Amir Globerson

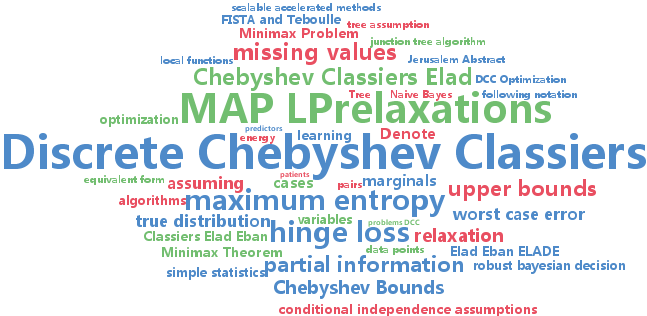

Discrete Chebyshev Classifiers (pdf)

In large scale learning problems it is often easy to collect simple statistics of the data, but hard or impractical to store all the original data. A key question in this setting is how to construct classifiers based on such partial information. One traditional approach to the problem has been to use maximum entropy arguments to induce a complete distribution on variables from statistics. However, this approach essentially makes conditional independence assumptions about the distribution, and furthermore does not optimize prediction loss. Here we present a framework for discriminative learning given a set of statistics. Specifically, we address the case where all variables are discrete and we have access to various marginals. Our approach minimizes the worst case hinge loss in this case, which upper bounds the generalization error. We show that for certain sets of statistics the problem is tractable, and in the general case can be approximated using MAP LP relaxations. Empirical results show that the method is competitive with other approaches that use the same input.

-

Marco Cuturi and Arnaud Doucet

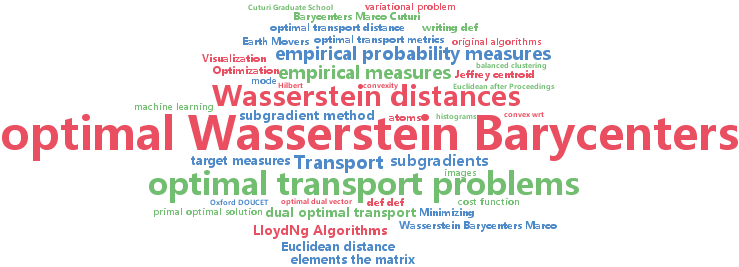

Fast Computation of Wasserstein Barycenters (pdf)

We present new algorithms to compute the mean of a set of $N$ empirical probability measures under the optimal transport metric. This mean, known as the Wasserstein barycenter~\citep{agueh2011barycenters,rabin2012

-

Yasuhiro Fujiwara and Go Irie

Efficient Label Propagation (pdf)

Label propagation is a popular graph-based semi-supervised learning framework. To obtain the optimal labeling scores, the label propagation algorithm requires a matrix inverse, which incurs a high computational cost of $O(n^3 + cn^2)$, where $n$ and $c$ are the numbers of data points and labels, respectively. This paper proposes an efficient label propagation algorithm that guarantees exactly the same labeling results as those yielded by optimal labeling scores. The key to our approach is to iteratively compute lower and upper bounds of labeling scores to prune unnecessary score computations. This idea significantly reduces the computational cost to $O(cnt)$, where $t$ is the average number of iterations for each label and $t \ll n$ in practice. Experiments demonstrate the significant superiority of our algorithm over existing label propagation methods.
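For context, the expensive baseline that the abstract refers to can be sketched as follows. This is not the pruning algorithm proposed in the paper. It assumes the common closed-form formulation $F = (I - \alpha S)^{-1} Y$ with a symmetrically normalized affinity matrix $S$; the abstract does not state which formulation the authors use, and the function name and the value of `alpha` are illustrative assumptions.

```python
import numpy as np


def label_propagation_closed_form(W, Y, alpha=0.9):
    """Closed-form label propagation scores F = (I - alpha * S)^{-1} Y.

    W: (n, n) symmetric nonnegative affinity matrix (every node has degree > 0).
    Y: (n, c) initial label matrix, one-hot rows for labeled points, zeros otherwise.
    alpha: propagation strength in (0, 1); an assumed default, not from the paper.
    """
    W = np.asarray(W, dtype=float)
    Y = np.asarray(Y, dtype=float)
    degrees = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    # Symmetric normalization S = D^{-1/2} W D^{-1/2}.
    S = W * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    n = W.shape[0]
    # Dense solve: cubic in n, which is the bottleneck the abstract mentions.
    return np.linalg.solve(np.eye(n) - alpha * S, Y)


# Hypothetical chain graph 0 - 1 - 2 - 3 with the two end points labeled.
W = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
Y = np.array([[1, 0],
              [0, 0],
              [0, 0],
              [0, 1]], dtype=float)
F = label_propagation_closed_form(W, Y)
labels = F.argmax(axis=1)
```

Each unlabeled node takes the label of the nearer labeled end point. The dense linear solve is what makes this baseline impractical for large $n$.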

-

Aaron Defazio and Justin Domke and Tiberio Caetano

Finito: A faster, permutable incremental gradient method for big data problems (pdf)

Recent advances in optimization theory have shown that smooth strongly convex finite sums can be minimized faster than by treating them as a black box "batch" problem. In this work we introduce a new method in this class with a theoretical convergence rate four times faster than existing methods, for sums with sufficiently many terms. This method is also amenable to a sampling without replacement scheme that in practice gives further speed-ups. We give empirical results showing state of the art performance.

-

Arun Rajkumar and Shivani Agarwal

A Statistical Convergence Perspective of Algorithms for Rank Aggregation from Pairwise Data (pdf)

There has been much interest recently in the problem of rank aggregation from pairwise data. A natural question that arises is: under what sorts of statistical assumptions do various rank aggregation algorithms converge to an 'optimal' ranking? In this paper, we consider this question in a natural setting where pairwise comparisons are drawn randomly and independently from some underlying probability distribution. We first show that, under a 'time-reversibility' or Bradley-Terry-Luce (BTL) condition on the distribution generating the outcomes of the pairwise comparisons, the rank centrality (PageRank) and least squares (HodgeRank) algorithms both converge to an optimal ranking. Next, we show that a matrix version of the Borda count algorithm, and more surprisingly, an algorithm which performs maximum likelihood estimation under a BTL assumption, both converge to an optimal ranking under a 'low-noise' condition that is strictly more general than BTL. Finally, we propose a new SVM-based algorithm for rank aggregation from pairwise data, and show that this converges to an optimal ranking under an even more general condition that we term 'generalized low-noise'. In all cases, we provide explicit sample complexity bounds for exact recovery of an optimal ranking. Our experiments confirm our theoretical findings and help to shed light on the statistical behavior of various rank aggregation algorithms.
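The BTL condition and a Borda-style aggregation can be illustrated with a small sketch. It assumes the standard BTL parameterization, in which item $i$ beats item $j$ with probability $w_i / (w_i + w_j)$ for positive scores $w$. Scoring each item by the row sum of the pairwise preference matrix is one simple reading of "a matrix version of the Borda count"; the paper's exact definition may differ, and all names and values below are hypothetical. Exact probabilities are used instead of sampled comparisons to keep the example deterministic.

```python
import numpy as np


def btl_pairwise_probabilities(w):
    """P[i, j] = probability that item i beats item j under BTL scores w."""
    w = np.asarray(w, dtype=float)
    P = w[:, None] / (w[:, None] + w[None, :])
    np.fill_diagonal(P, 0.0)  # an item is never compared with itself
    return P


def borda_ranking(P):
    """Rank items by total pairwise win probability, best item first."""
    scores = P.sum(axis=1)
    return np.argsort(-scores)


# Hypothetical BTL scores; the true ordering from best to worst is 1, 2, 0, 3.
w = [1.0, 4.0, 2.0, 0.5]
P = btl_pairwise_probabilities(w)
ranking = borda_ranking(P)
```

With exact BTL probabilities, the Borda-style scores sort the items in the same order as the underlying scores $w$. The paper's sample complexity bounds concern how many random comparisons are needed before the empirical version of `P` yields the same ranking.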

-

Tasuku Soma and Naonori Kakimura and Kazuhiro Inaba and Ken-ichi Kawarabayashi

Optimal Budget Allocation: Theoretical Guarantee and Efficient Algorithm (pdf)

We consider the budget allocation problem over the bipartite influence model proposed by Alon et al. This problem can be viewed as the well-known influence maximization problem with budget constraints. We first show that this problem and its much more general form fall into a general setting, namely monotone submodular function maximization over the integer lattice subject to a knapsack constraint. Our framework includes Alon et al.'s model, even with a competitor and with cost. We then give a (1-1/e)-approximation algorithm for this more general problem. Furthermore, when influence probabilities are nonincreasing, we obtain a faster (1-1/e)-approximation algorithm, which runs in essentially linear time in the number of nodes. This allows us to run our algorithm on instances with almost 10M edges; indeed, our experiments indicate that it can scale to 1 billion edges, which would take only approximately 500 seconds.

-

Gavin Taylor and Connor Geer and David Piekut

An Analysis of State-Relevance Weights and Sampling Distributions on L1-Regularized Approximate Linear Programming Approximation Accuracy (pdf)

Recent interest in the use of $L_1$ regularization in value function approximation includes Petrik et al.'s introduction of $L_1$-Regularized Approximate Linear Programming (RALP). RALP is unique among $L_1$-regularized approaches in that it approximates the optimal value function using off-policy samples. Additionally, it produces policies which outperform those of previous methods, such as LSPI. RALP's value function approximation quality is affected heavily by the choice of state-relevance weights in the objective function of the linear program, and by the distribution from which samples are drawn; however, there has been no discussion of these considerations in the previous literature. In this paper, we discuss and explain the effects of choices in the state-relevance weights and sampling distribution on approximation quality, using both theoretical and experimental illustrations. The results provide not only insight into these effects, but also intuition into the types of MDPs which are especially well suited for approximation with RALP.