numerical experiments

-

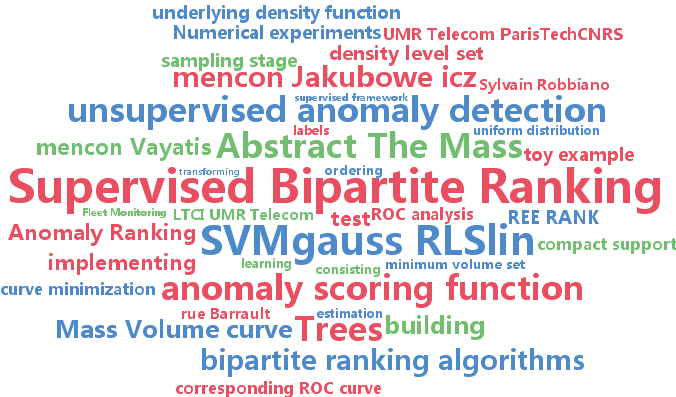

Stephan Clémençon and Sylvain Robbiano

Anomaly Ranking as Supervised Bipartite Ranking (pdf)

The Mass Volume (MV) curve is a visual tool to evaluate the performance of a scoring function with regard to its capacity to rank data in the same order as the underlying density function. Anomaly ranking refers to the unsupervised learning task which consists in building a scoring function, based on unlabeled data, with a MV curve as low as possible at any point. In this paper, it is proved that, in the case where the data generating probability distribution has compact support, anomaly ranking is equivalent to (supervised) bipartite ranking, where the goal is to discriminate between the underlying probability distribution and the uniform distribution with same support. In this situation, the MV curve can be then seen as a simple transform of the corresponding ROC curve. Exploiting this view, we then show how to use bipartite ranking algorithms, possibly combined with random sampling, to solve the MV curve minimization problem. Numerical experiments based on a variety of bipartite ranking algorithms well-documented in the literature are displayed in order to illustrate the relevance of our approach.
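As a rough illustration of the ROC view described in this abstract, the sketch below estimates points of an MV curve from two samples: one drawn from the data distribution (treated as the positive class) and one drawn uniformly on the same support (treated as the negative class). For a threshold `t`, the mass of the level set `{s >= t}` is the true positive rate, and its volume is the support volume times the false positive rate. The function names, the one-dimensional example and the choice of thresholds are assumptions made for illustration and are not taken from the paper.

```python
import random


def mass_volume_point(score, data, uniform, support_volume, threshold):
    """Estimate (mass, volume) of the level set {x : score(x) >= threshold}.

    data    : sample from the data distribution (positive class)
    uniform : sample from the uniform distribution on the same support
    """
    # Mass = fraction of data points in the level set (true positive rate).
    mass = sum(score(x) >= threshold for x in data) / len(data)
    # Fraction of uniform points in the level set (false positive rate).
    fpr = sum(score(u) >= threshold for u in uniform) / len(uniform)
    # Volume of the level set = support volume * uniform probability.
    return mass, support_volume * fpr


def empirical_mv_curve(score, data, uniform, support_volume, thresholds):
    """Return one (mass, volume) pair per threshold."""
    return [
        mass_volume_point(score, data, uniform, support_volume, t)
        for t in thresholds
    ]


def example_score(x):
    """Hypothetical scoring function: higher near the mode at 0.5."""
    return -abs(x - 0.5)
```

A scoring function that orders points like the density should give, at every mass level, a smaller volume than a scoring function that does not.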

-

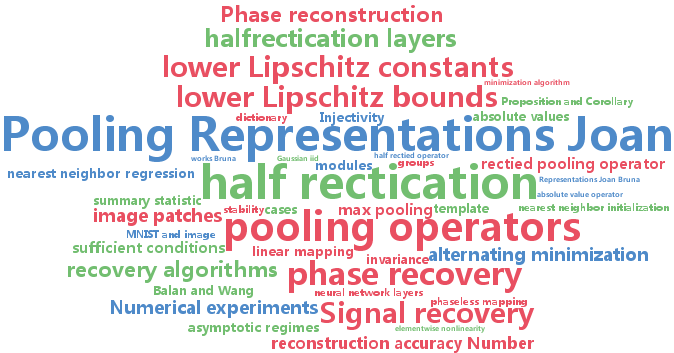

Joan Bruna Estrach and Arthur Szlam and Yann LeCun

Signal recovery from Pooling Representations (pdf)

Pooling operators construct non-linear representations by cascading a redundant linear transform, followed by a point-wise nonlinearity and a local aggregation, typically implemented with an $\ell_p$ norm. Their efficiency in recognition architectures is based on their ability to locally contract the input space, but also on their capacity to retain as much stable information as possible. We address this latter question by computing the upper and lower Lipschitz bounds of $\ell_p$ pooling operators for $p=1, 2, \infty$ as well as their half-rectified equivalents, which give sufficient conditions for the design of invertible pooling layers. Numerical experiments on MNIST and image patches confirm that pooling layers can be inverted with phase recovery algorithms. Moreover, the regularity of the inverse pooling, controlled by the lower Lipschitz constant, is empirically verified with a nearest neighbor regression.
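The cascade described in this abstract (linear transform, point-wise nonlinearity, local $\ell_p$ aggregation) can be sketched in a few lines. This is a minimal illustration only: the function name `lp_pool`, the use of the absolute value as the point-wise nonlinearity, the `half_rectified` flag and the non-overlapping pooling blocks are assumptions, not the authors' implementation.

```python
import math


def lp_pool(x, filters, pool_size, p, half_rectified=False):
    """Apply a linear transform, a point-wise nonlinearity, then l_p pooling.

    x        : input vector (list of floats)
    filters  : rows of the (possibly redundant) linear transform
    pool_size: number of consecutive responses aggregated together
    p        : 1, 2, or math.inf
    """
    # Linear transform: one inner product per filter.
    responses = [sum(w * v for w, v in zip(f, x)) for f in filters]

    # Point-wise nonlinearity: half-rectification max(r, 0) or modulus |r|.
    if half_rectified:
        responses = [max(r, 0.0) for r in responses]
    else:
        responses = [abs(r) for r in responses]

    # Local aggregation: l_p norm over non-overlapping blocks.
    pooled = []
    for start in range(0, len(responses), pool_size):
        block = responses[start:start + pool_size]
        if math.isinf(p):
            pooled.append(max(block))
        else:
            pooled.append(sum(b ** p for b in block) ** (1.0 / p))
    return pooled
```

For instance, with the filters `[[1, 0], [0, 1], [-1, 0], [0, -1]]` (the identity stacked with its negation, a simple redundant transform), the input `[3, 4]` and `pool_size=2`, each block holds the values 3 and 4, so the $\ell_2$ pooling of each block is 5.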

-

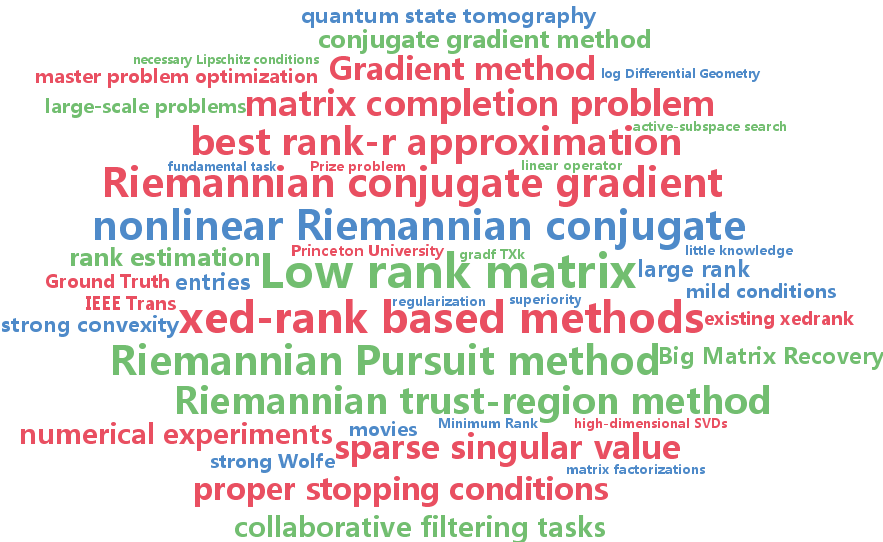

Mingkui Tan and Ivor W. Tsang and Li Wang and Bart Vandereycken and Sinno Jialin Pan

Riemannian Pursuit for Big Matrix Recovery (pdf)

Low rank matrix recovery is a fundamental task in many real-world applications. The performance of existing methods, however, deteriorates significantly when applied to ill-conditioned or large-scale matrices. In this paper, we therefore propose an efficient method, called Riemannian Pursuit (RP), that aims to address these two problems simultaneously. Our method consists of a sequence of fixed-rank optimization problems. Each subproblem, solved by a nonlinear Riemannian conjugate gradient method, aims to correct the solution in the most important subspace of increasing size. Theoretically, RP converges linearly under mild conditions and experimental results show that it substantially outperforms existing methods when applied to large-scale and ill-conditioned matrices.

-

Taiji Suzuki

Stochastic Dual Coordinate Ascent with Alternating Direction Method of Multipliers (pdf)

We propose a new stochastic dual coordinate ascent technique that can be applied to a wide range of regularized learning problems. Our method is based on the alternating direction method of multipliers (ADMM) to deal with complex regularization functions such as structured regularizations. Although the original ADMM is a batch method, the proposed method offers a stochastic update rule where each iteration requires only one or a few sample observations. Moreover, our method naturally accommodates mini-batch updates, which speed up convergence. We show that, under mild assumptions, our method converges exponentially. The numerical experiments show that our method performs efficiently.

-

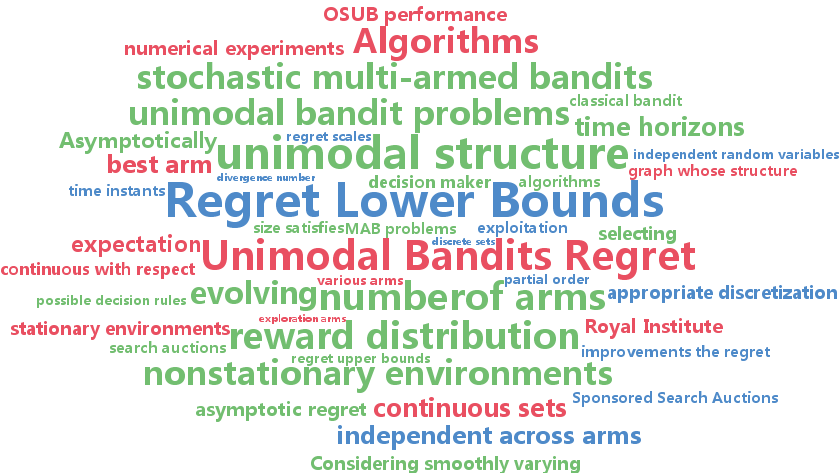

Richard Combes and Alexandre Proutiere

Unimodal Bandits: Regret Lower Bounds and Optimal Algorithms (pdf)

We consider stochastic multi-armed bandits where the expected reward is a unimodal function over partially ordered arms. This important class of problems has been recently investigated in (Cope 2009, Yu 2011). The set of arms is either discrete, in which case arms correspond to the vertices of a finite graph whose structure represents similarity in rewards, or continuous, in which case arms belong to a bounded interval. For discrete unimodal bandits, we derive asymptotic lower bounds for the regret achieved under any algorithm, and propose OSUB, an algorithm whose regret matches this lower bound. Our algorithm optimally exploits the unimodal structure of the problem, and surprisingly, its asymptotic regret does not depend on the number of arms. We also provide a regret upper bound for OSUB in non-stationary environments where the expected rewards smoothly evolve over time. The analytical results are supported by numerical experiments showing that OSUB performs significantly better than the state-of-the-art algorithms. For continuous sets of arms, we provide a brief discussion. We show that combining an appropriate discretization of the set of arms with the UCB algorithm yields an order-optimal regret, and in practice, outperforms recently proposed algorithms designed to exploit the unimodal structure.
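For the continuous case mentioned at the end of this abstract, the idea of running UCB on a discretized arm set can be sketched as follows. This is a minimal illustration, not the authors' procedure: it uses the standard UCB1 index on a uniform grid over $[0, 1]$ with Bernoulli rewards, and the grid size, the reward model and the hypothetical mean function `triangle_mean` are all assumptions. In particular, the abstract does not say what an "appropriate" discretization is, so `num_arms` is left as a free parameter here.

```python
import math
import random


def triangle_mean(x):
    """Hypothetical unimodal expected reward on [0, 1], peaked at x = 0.3."""
    return max(0.0, 0.9 - 2.0 * abs(x - 0.3))


def ucb1_on_grid(mean_reward, num_arms, horizon, rng):
    """Run UCB1 on a uniform grid of num_arms points in [0, 1].

    Requires num_arms >= 2 and horizon >= num_arms.
    Returns the grid and the number of pulls of each grid point.
    """
    arms = [i / (num_arms - 1) for i in range(num_arms)]
    pulls = [0] * num_arms
    totals = [0.0] * num_arms

    for t in range(1, horizon + 1):
        if t <= num_arms:
            # Initialization: pull every arm once.
            k = t - 1
        else:
            # UCB1 index: empirical mean plus exploration bonus.
            k = max(
                range(num_arms),
                key=lambda i: totals[i] / pulls[i]
                + math.sqrt(2.0 * math.log(t) / pulls[i]),
            )
        # Bernoulli reward with the (unknown to the learner) mean.
        reward = 1.0 if rng.random() < mean_reward(arms[k]) else 0.0
        pulls[k] += 1
        totals[k] += reward

    return arms, pulls
```

Calling `ucb1_on_grid(triangle_mean, 11, 2000, random.Random(0))` returns the grid together with the pull counts, which can be inspected to see how the pulls concentrate around the peak as the horizon grows.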

-

Odalric-Ambrym Maillard and Shie Mannor

Latent Bandits. (pdf)

We consider a multi-armed bandit problem where the reward distributions are indexed by two sets --one for arms, one for type-- and can be partitioned into a small number of clusters according to the type. First, we consider the setting where all reward distributions are known and all types have the same underlying cluster; the type's identity is, however, unknown. Second, we study the case where types may come from different classes, which is significantly more challenging. Finally, we tackle the case where the reward distributions are completely unknown. In each setting, we introduce specific algorithms and derive non-trivial regret performance. Numerical experiments show that, in the most challenging agnostic case, the proposed algorithm achieves excellent performance in several difficult scenarios.