neural nets

-

Sanjeev Arora and Aditya Bhaskara and Rong Ge and Tengyu Ma

Provable Bounds for Learning Some Deep Representations (pdf)

We give algorithms with provable guarantees that learn a class of deep nets in the generative model view popularized by Hinton and others. Our generative model is an n node multilayer neural net that has degree at most $n^{\gamma

-

Diederik Kingma and Max Welling

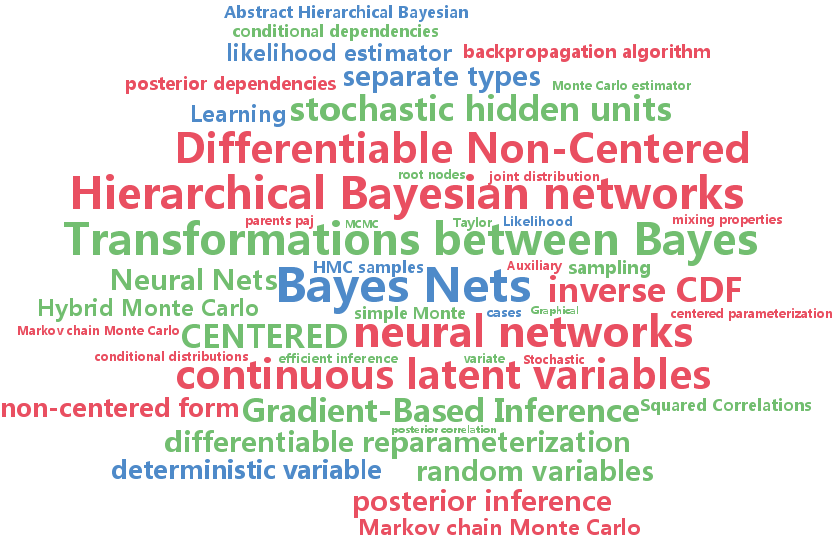

Efficient Gradient-Based Inference through Transformations between Bayes Nets and Neural Nets (pdf)

Hierarchical Bayesian networks and neural networks with stochastic hidden units are commonly perceived as two separate types of models. We show that either of these types of models can often be transformed into an instance of the other by switching between centered and differentiable non-centered parameterizations of the latent variables. The choice of parameterization greatly influences the efficiency of gradient-based posterior inference; we show that the two parameterizations are often complementary to each other, clarify when each is preferred, and show how inference can be made robust. In the non-centered form, a simple Monte Carlo estimator of the marginal likelihood can be used for learning the parameters. Theoretical results are supported by experiments.
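The difference between the two parameterizations can be illustrated with a minimal sketch, assuming a single Gaussian latent variable $z \sim \mathcal{N}(\mu, \sigma^2)$. This is not code from the paper: the function names and the test objective $E[z^2]$ are hypothetical choices made only for illustration. In the centered form, $z$ is sampled directly; in the non-centered form, parameter-free noise $\epsilon \sim \mathcal{N}(0, 1)$ is sampled first and $z = \mu + \sigma\epsilon$ is a deterministic, differentiable function of the parameters, so gradients can be estimated by simple Monte Carlo.

```python
import random


def sample_centered(mu, sigma, rng):
    # Centered form: z is drawn directly from N(mu, sigma^2).
    # The sample has no explicit differentiable dependence on mu or sigma.
    return rng.gauss(mu, sigma)


def sample_noncentered(mu, sigma, rng):
    # Non-centered form: draw noise that does not depend on the parameters,
    # then apply a deterministic transformation of (mu, sigma, eps).
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps, eps


def grad_expected_square(mu, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the gradient of E[z^2] w.r.t. mu and sigma.

    Uses the non-centered form, where dz/dmu = 1 and dz/dsigma = eps.
    The objective z^2 is an illustrative assumption, not from the paper.
    """
    rng = random.Random(seed)
    g_mu = 0.0
    g_sigma = 0.0
    for _ in range(n_samples):
        z, eps = sample_noncentered(mu, sigma, rng)
        # Chain rule: d(z^2)/dmu = 2z * 1, d(z^2)/dsigma = 2z * eps.
        g_mu += 2.0 * z
        g_sigma += 2.0 * z * eps
    return g_mu / n_samples, g_sigma / n_samples


if __name__ == "__main__":
    print(grad_expected_square(1.5, 0.5))
```

For this toy objective the exact answer is known, since $E[z^2] = \mu^2 + \sigma^2$ gives gradients $2\mu$ and $2\sigma$, so the estimates can be checked against those values.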