joint distribution

-

David Inouye and Pradeep Ravikumar and Inderjit Dhillon

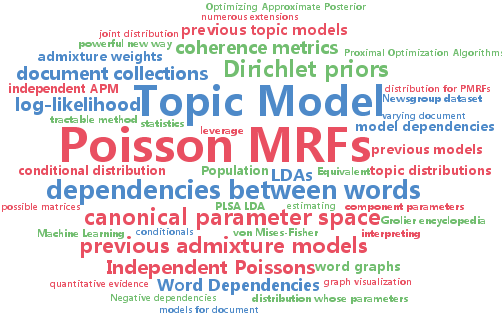

Admixture of Poisson MRFs: A Topic Model with Word Dependencies (pdf)

This paper introduces a new topic model based on an admixture of Poisson Markov Random Fields (APM), which can model dependencies between words as opposed to previous independent topic models such as PLSA (Hofmann, 1999), LDA (Blei et al., 2003) or SAM (Reisinger et al., 2010). We propose a class of admixture models that generalizes previous topic models and show an equivalence between the conditional distribution of LDA and independent Poissons—suggesting that APM subsumes the modeling power of LDA. We present a tractable method for estimating the parameters of an APM based on the pseudo log-likelihood and demonstrate the benefits of APM over previous models by preliminary qualitative and quantitative experiments.
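
As a hedged illustration of the kind of equivalence the abstract mentions, the classical identity below shows that independent Poisson counts, once conditioned on their total, follow a multinomial distribution (the per-topic word distribution used in LDA). The symbols $\lambda_i$, $\Lambda$, $p$ and $n$ are introduced here for illustration and are not taken from the paper, whose exact statement is not reproduced in this abstract.

```latex
\begin{align*}
x_i &\sim \mathrm{Poisson}(\lambda_i) \ \text{independently for } i = 1, \dots, p,
  \qquad \Lambda = \sum_{i=1}^{p} \lambda_i, \\
P\Big(x_1, \dots, x_p \,\Big|\, \sum_{i=1}^{p} x_i = n\Big)
  &= \frac{\prod_{i=1}^{p} e^{-\lambda_i} \lambda_i^{x_i} / x_i!}{e^{-\Lambda} \Lambda^{n} / n!}
   = \frac{n!}{\prod_{i=1}^{p} x_i!} \prod_{i=1}^{p} \Big(\frac{\lambda_i}{\Lambda}\Big)^{x_i}.
\end{align*}
```

The right-hand side is a multinomial with $n$ trials and probabilities $\lambda_i / \Lambda$, which uses $\sum_i x_i = n$ to cancel the exponential factors.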

-

Le Song and Animashree Anandkumar and Bo Dai and Bo Xie

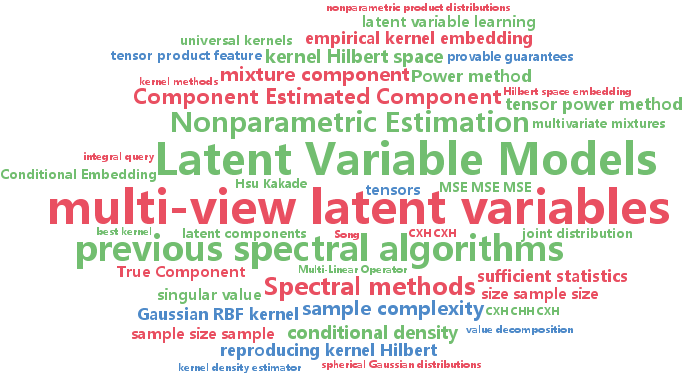

Nonparametric Estimation of Multi-View Latent Variable Models (pdf)

Spectral methods have greatly advanced the estimation of latent variable models, generating a sequence of novel and efficient algorithms with strong theoretical guarantees. However, current spectral algorithms are largely restricted to mixtures of discrete or Gaussian distributions. In this paper, we propose a kernel method for learning multi-view latent variable models, allowing each mixture component to be nonparametric and learned from data in an unsupervised fashion. The key idea of our method is to embed the joint distribution of a multi-view latent variable model into a reproducing kernel Hilbert space, and then the latent parameters are recovered using a robust tensor power method. We establish that the sample complexity for the proposed method is quadratic in the number of latent components and is a low-order polynomial in the other relevant parameters. Thus, our nonparametric tensor approach to learning latent variable models enjoys good sample and computational efficiencies. As a special case of our framework, we also obtain a first unsupervised conditional density estimator of the kind with provable guarantees. On both synthetic and real-world datasets, the nonparametric tensor power method compares favorably to the EM algorithm and other spectral algorithms.

-

Mathias Niepert and Pedro Domingos

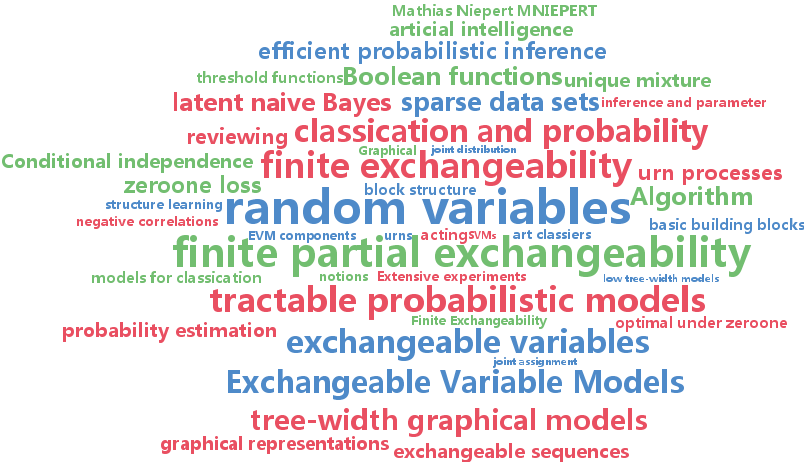

Exchangeable Variable Models (pdf)

A sequence of random variables is exchangeable if its joint distribution is invariant under variable permutations. We introduce exchangeable variable models (EVMs) as a novel class of probabilistic models whose basic building blocks are partially exchangeable sequences, a generalization of exchangeable sequences. We prove that a family of tractable EVMs is optimal under zero-one loss for a large class of functions, including parity and threshold functions, and strictly subsumes existing tractable independence-based model families. Extensive experiments show that EVMs outperform state-of-the-art classifiers such as SVMs and probabilistic models that are based solely on independence assumptions.
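
The definition of exchangeability in the first sentence can be checked by brute force on a small discrete example. The sketch below is only an illustration of that definition, not of the EVM models themselves; the function name `is_exchangeable` and both example distributions are made up for this purpose.

```python
from itertools import permutations, product

def is_exchangeable(joint, tol=1e-12):
    """Return True if the joint pmf is invariant under every permutation of its variables.

    `joint` maps each assignment (a tuple of values) to its probability.
    """
    for assignment, prob in joint.items():
        for permuted in permutations(assignment):
            if abs(joint.get(permuted, 0.0) - prob) > tol:
                return False
    return True

# Exchangeable example: the probability of an assignment of three binary
# variables depends only on how many of them equal one.
prob_by_count = {0: 0.4, 1: 0.1, 2: 0.05, 3: 0.15}
exchangeable = {bits: prob_by_count[sum(bits)] for bits in product((0, 1), repeat=3)}

# Non-exchangeable example: independent variables with unequal marginals.
marginals = (0.9, 0.5, 0.5)
independent_unequal = {}
for bits in product((0, 1), repeat=3):
    prob = 1.0
    for bit, p_one in zip(bits, marginals):
        prob *= p_one if bit == 1 else 1.0 - p_one
    independent_unequal[bits] = prob

print(is_exchangeable(exchangeable))
print(is_exchangeable(independent_unequal))
```

The second example shows that independence alone does not imply exchangeability: the variables must also be identically distributed.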

-

Sergey Bartunov and Dmitry Vetrov

Variational Inference for Sequential Distance Dependent Chinese Restaurant Process (pdf)

The recently proposed distance dependent Chinese Restaurant Process (ddCRP) generalizes the extensively used Chinese Restaurant Process (CRP) by accounting for dependencies between data points. Its posterior is intractable, and so far only MCMC methods have been used for inference. Because of the very different nature of the ddCRP, no prior developments in variational methods for Bayesian nonparametrics are applicable. In this paper we propose a novel variational inference method for the important sequential case of the ddCRP (seqddCRP) by revealing its connection with the Laplacian of the random graph constructed by the process. We develop an efficient algorithm for optimizing the variational lower bound and demonstrate its efficiency compared to a Gibbs sampler. We also apply our variational approximation to a CRP-equivalent seqddCRP mixture model, where it can be considered an alternative to the one based on the truncated stick-breaking representation. This allowed us to achieve a significantly better variational lower bound than the variational approximation based on truncated stick-breaking for the Dirichlet process.
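
For readers unfamiliar with the process, here is a minimal sketch of drawing from the prior of a sequential ddCRP, where each customer links either to an earlier customer or to itself and clusters are the connected components of the link graph. It illustrates the generative process only, not the variational inference proposed in the paper. The function name, the use of the index gap `i - j` as the distance, and the `decay` argument are assumptions made for this example.

```python
import random

def sample_seq_ddcrp(n, alpha, decay, rng):
    """Draw customer links and induced cluster labels for a sequential ddCRP.

    Customer i links to an earlier customer j < i with weight decay(i - j),
    or to itself with weight alpha.
    """
    links = []
    for i in range(n):
        candidates = list(range(i + 1))  # earlier customers, then i itself
        weights = [decay(i - j) for j in range(i)] + [alpha]
        links.append(rng.choices(candidates, weights=weights, k=1)[0])
    # Links only point backwards, so each customer inherits the label of the
    # customer it links to; self-linked customers start a new cluster.
    labels = []
    for i in range(n):
        labels.append(i if links[i] == i else labels[links[i]])
    return links, labels

rng = random.Random(0)
links, labels = sample_seq_ddcrp(10, alpha=1.0, decay=lambda d: 1.0, rng=rng)
print(links)
print(labels)
```

With a constant decay of 1, customer `i` starts a new cluster with probability `alpha / (i + alpha)` and otherwise joins an existing cluster with probability proportional to its size, which recovers the ordinary CRP.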

-

Yoshua Bengio and Eric Laufer and Guillaume Alain and Jason Yosinski

Deep Generative Stochastic Networks Trainable by Backprop (pdf)

We introduce a novel training principle for probabilistic models that is an alternative to maximum likelihood. The proposed Generative Stochastic Networks (GSN) framework is based on learning the transition operator of a Markov chain whose stationary distribution estimates the data distribution. Because the transition distribution is a conditional distribution generally involving a small move, it has fewer dominant modes, being unimodal in the limit of small moves. Thus, it is easier to learn, more like learning to perform supervised function approximation, with gradients that can be obtained by backprop. The theorems provided here generalize recent work on the probabilistic interpretation of denoising autoencoders and provide an interesting justification for dependency networks and generalized pseudolikelihood (along with defining an appropriate joint distribution and sampling mechanism, even when the conditionals are not consistent). GSNs can be used with missing inputs and can be used to sample subsets of variables given the rest. Successful experiments are conducted, validating these theoretical results, on two image datasets and with a particular architecture that mimics the Deep Boltzmann Machine Gibbs sampler but allows training to proceed with backprop, without the need for layerwise pretraining.

-

David Barber and Yali Wang

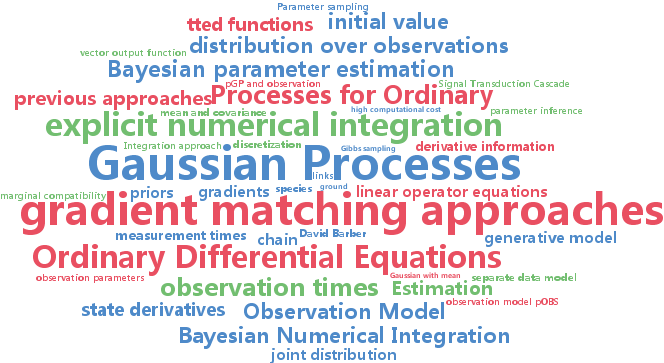

Gaussian Processes for Bayesian Estimation in Ordinary Differential Equations (pdf)

Bayesian parameter estimation in coupled ordinary differential equations (ODEs) is challenging due to the high computational cost of numerical integration. In gradient matching, a separate data model is introduced with the property that its gradient can be calculated easily. Parameter estimation is achieved by requiring consistency between the gradients computed from the data model and those specified by the ODE. We propose a Gaussian process model that directly links state derivative information with system observations, simplifying previous approaches and providing a natural generative model.
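
The gradient-matching idea described above can be sketched on a toy problem without any numerical integration. This example substitutes central finite differences for the smooth data model and uses plain least squares rather than the Gaussian process model proposed in the paper; the ODE `dx/dt = -theta * x`, the function name, and the noise-free data are all assumptions chosen for illustration.

```python
import math

def gradient_matching_decay_rate(times, states):
    """Estimate theta in dx/dt = -theta * x by matching gradients.

    Step 1: estimate dx/dt from the data (central differences here).
    Step 2: choose theta to minimize sum((dxdt + theta * x)^2), which has
            the closed form theta = -sum(dxdt * x) / sum(x^2).
    """
    numerator = 0.0
    denominator = 0.0
    for k in range(1, len(times) - 1):
        dxdt = (states[k + 1] - states[k - 1]) / (times[k + 1] - times[k - 1])
        numerator += -dxdt * states[k]
        denominator += states[k] ** 2
    return numerator / denominator

# Noise-free observations of x(t) = exp(-1.5 t) on a fine grid.
times = [0.01 * k for k in range(201)]
states = [math.exp(-1.5 * t) for t in times]
theta_hat = gradient_matching_decay_rate(times, states)
print(theta_hat)
```

The estimate is obtained without ever solving the ODE, which is the computational appeal of gradient matching; with noisy data, the derivative estimate would need a proper smoother.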

-

Alexander Novikov and Anton Rodomanov and Anton Osokin and Dmitry Vetrov

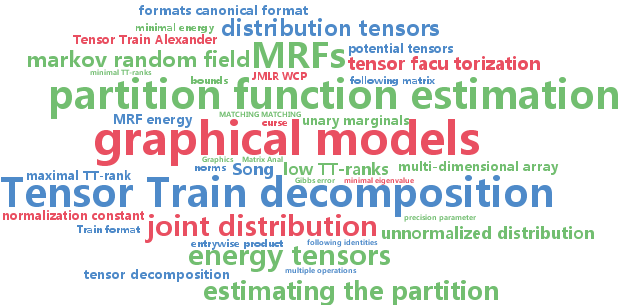

Putting MRFs on a Tensor Train (pdf)

In this paper we present a new framework for dealing with probabilistic graphical models. Our approach relies on the recently proposed Tensor Train format (TT-format) of a tensor, which is compact yet allows for efficient application of linear algebra operations. We present a way to convert the energy of a Markov random field to the TT-format and show how one can exploit the properties of the TT-format to attack the tasks of partition function estimation and MAP inference. We provide theoretical guarantees on the accuracy of the proposed algorithm for estimating the partition function and compare our methods against several state-of-the-art algorithms.

-

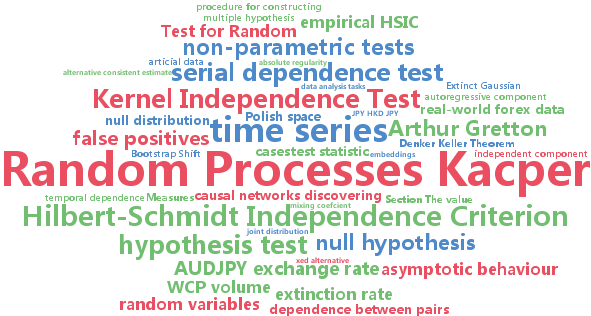

Kacper Chwialkowski and Arthur Gretton

A Kernel Independence Test for Random Processes (pdf)

A non-parametric approach to the problem of testing the independence of two random processes is developed. The test statistic is the Hilbert-Schmidt Independence Criterion (HSIC), which was used previously in testing independence for i.i.d. pairs of variables. The asymptotic behaviour of HSIC is established when computed from samples drawn from random processes. It is shown that earlier bootstrap procedures which worked in the i.i.d. case will fail for random processes, and an alternative consistent estimate of the p-values is proposed. Tests on artificial data and real-world forex data indicate that the new test procedure discovers dependence which is missed by linear approaches, while the earlier bootstrap procedure returns an elevated number of false positives.
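
As a rough illustration of the test statistic named above, the following sketch computes the standard biased HSIC estimate for scalar samples with a Gaussian kernel. It covers only the statistic in its usual i.i.d. form, with the common `1 / n^2` normalization; it does not implement the paper's p-value estimate for random processes. The function names, the bandwidth of 1.0, and the toy data are assumptions made for this example.

```python
import math
import random

def gaussian_gram(xs, bandwidth=1.0):
    """Gram matrix of a Gaussian kernel on scalar samples."""
    return [[math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2)) for b in xs] for a in xs]

def center(gram):
    """Double-center a symmetric Gram matrix: H K H with H = I - (1/n) 1 1^T."""
    n = len(gram)
    row_means = [sum(row) / n for row in gram]
    grand_mean = sum(row_means) / n
    return [[gram[i][j] - row_means[i] - row_means[j] + grand_mean for j in range(n)]
            for i in range(n)]

def hsic_biased(xs, ys, bandwidth=1.0):
    """Biased HSIC estimate trace(K H L H) / n^2."""
    n = len(xs)
    k_centered = center(gaussian_gram(xs, bandwidth))
    l_gram = gaussian_gram(ys, bandwidth)
    # trace(H K H L) equals the sum of elementwise products for symmetric matrices.
    return sum(k_centered[i][j] * l_gram[i][j] for i in range(n) for j in range(n)) / n ** 2

rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(150)]
y_dependent = [v * v for v in x]                         # nonlinear dependence on x
y_independent = [rng.gauss(0.0, 1.0) for _ in range(150)]

print(hsic_biased(x, y_dependent))
print(hsic_biased(x, y_independent))
```

The dependent pair has essentially zero linear correlation, so it is the kind of relationship a linear approach would miss while a kernel statistic can pick it up.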

-

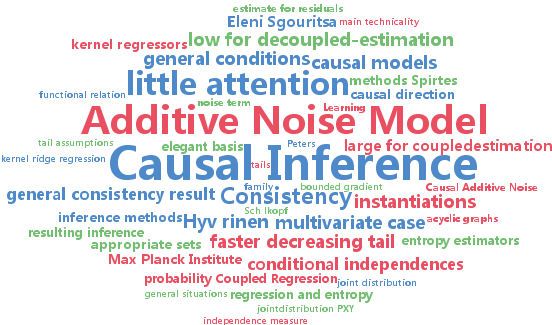

Samory Kpotufe and Eleni Sgouritsa and Dominik Janzing and Bernhard Schoelkopf

Consistency of Causal Inference under the Additive Noise Model (pdf)

We analyze a family of methods for statistical causal inference from samples under the so-called Additive Noise Model. While most work on the subject has concentrated on establishing the soundness of the Additive Noise Model, the statistical consistency of the resulting inference methods has received little attention. We derive general conditions under which the given family of inference methods consistently infers the causal direction in a nonparametric setting.

-

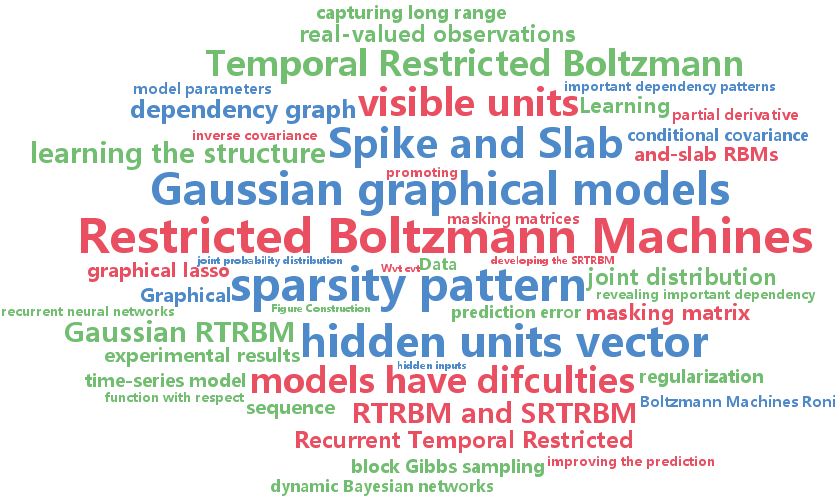

Roni Mittelman and Benjamin Kuipers and Silvio Savarese and Honglak Lee

Structured Recurrent Temporal Restricted Boltzmann Machines (pdf)

The recurrent temporal restricted Boltzmann machine (RTRBM) is a probabilistic model for temporal data that has been shown to effectively capture both short and long-term dependencies in time-series. The topology of the RTRBM graphical model, however, assumes full connectivity between all the pairs of visible and hidden units, therefore ignoring the dependency structure between the different observations. Learning this structure has the potential not only to improve the prediction performance, but also to reveal important patterns in the data. For example, given an econometric dataset, we could identify interesting dependencies between different market sectors; given a meteorological dataset, we could identify regional weather patterns. In this work we propose a new class of RTRBM, which explicitly uses a dependency graph to model the structure in the problem and to define the energy function. We refer to the new model as the structured RTRBM (SRTRBM). Our technique is related to methods such as graphical lasso, which are used to learn the topology of Gaussian graphical models. We also develop a spike-and-slab version of the RTRBM, and combine it with our method to learn structure in datasets with real-valued observations. Our experimental results using synthetic and real datasets demonstrate that the SRTRBM can improve the prediction performance of the RTRBM, particularly when the number of visible units is large and the size of the training set is small. It also reveals the structure underlying our benchmark datasets.

-

Diederik Kingma and Max Welling

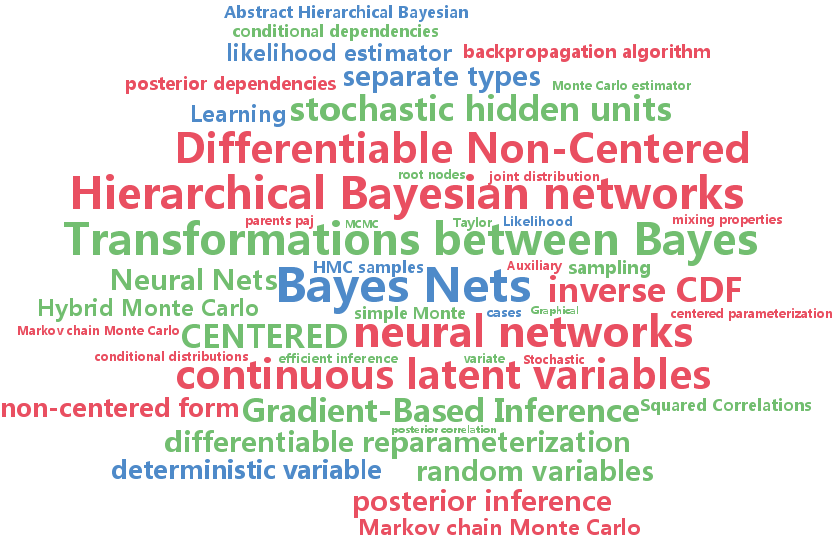

Efficient Gradient-Based Inference through Transformations between Bayes Nets and Neural Nets (pdf)

Hierarchical Bayesian networks and neural networks with stochastic hidden units are commonly perceived as two separate types of models. We show that either of these types of models can often be transformed into an instance of the other, by switching between centered and differentiable non-centered parameterizations of the latent variables. The choice of parameterization greatly influences the efficiency of gradient-based posterior inference; we show that the two parameterizations are often complementary to each other, clarify when each is preferred, and show how inference can be made robust. In the non-centered form, a simple Monte Carlo estimator of the marginal likelihood can be used for learning the parameters. Theoretical results are supported by experiments.
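
To make the two parameterizations concrete, here is a minimal sketch for a single Gaussian latent variable. In the centered form the variable is drawn directly from its conditional distribution; in the non-centered form it is a deterministic, differentiable function of the parameters and an auxiliary noise variable. The Gaussian case, the function names, and the values of `mu` and `sigma` are assumptions chosen for illustration; the paper treats more general models.

```python
import random
import statistics

def sample_centered(mu, sigma, rng):
    """Centered form: z is drawn directly from N(mu, sigma^2)."""
    return rng.gauss(mu, sigma)

def sample_noncentered(mu, sigma, rng):
    """Non-centered form: eps ~ N(0, 1), then z = mu + sigma * eps.

    z is differentiable in mu and sigma for fixed eps
    (dz/dmu = 1, dz/dsigma = eps).
    """
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps

rng = random.Random(0)
mu, sigma = 2.0, 0.5
centered = [sample_centered(mu, sigma, rng) for _ in range(20000)]
noncentered = [sample_noncentered(mu, sigma, rng) for _ in range(20000)]

# Both forms target the same distribution, so sample moments should be close.
print(statistics.fmean(centered), statistics.pvariance(centered))
print(statistics.fmean(noncentered), statistics.pvariance(noncentered))
```

Both samplers define the same marginal distribution over `z`; what changes is where the randomness enters, which is what affects gradient-based inference.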