data

-

Wenzhao Lian and Vinayak Rao and Brian Eriksson and Lawrence Carin

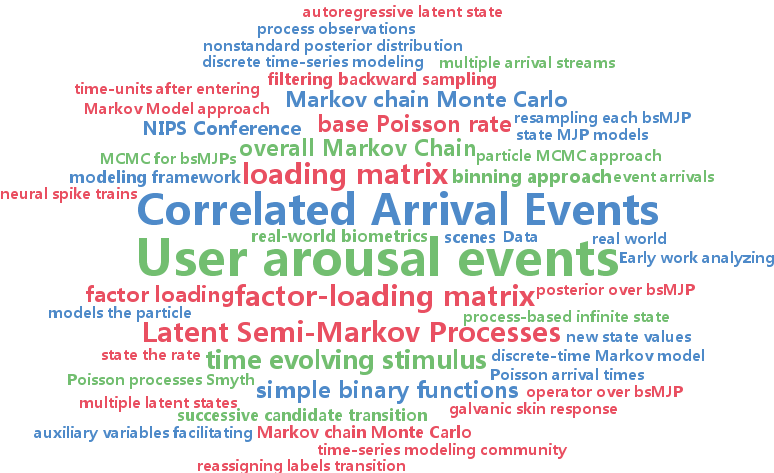

Modeling Correlated Arrival Events with Latent Semi-Markov Processes (pdf)

The analysis and characterization of correlated point process data has wide applications, ranging from biomedical research to network analysis. In this work, we model such data as generated by a latent collection of continuous-time binary semi-Markov processes, corresponding to external events appearing and disappearing. A continuous-time modeling framework is more appropriate for multichannel point process data than a binning approach requiring time discretization, and we show connections between our model and recent ideas from the discrete-time literature. We describe an efficient MCMC algorithm for posterior inference, and apply our ideas to both synthetic data and a real-world biometrics application.

-

Sergey Bartunov and Dmitry Vetrov

Variational Inference for Sequential Distance Dependent Chinese Restaurant Process (pdf)

The recently proposed distance dependent Chinese Restaurant Process (ddCRP) generalizes the extensively used Chinese Restaurant Process (CRP) by accounting for dependencies between data points. Its posterior is intractable, and so far only MCMC methods have been used for inference. Because of the very different nature of the ddCRP, no prior developments in variational methods for Bayesian nonparametrics are applicable. In this paper we propose a novel variational inference method for the important sequential case of the ddCRP (seqddCRP) by revealing its connection with the Laplacian of the random graph constructed by the process. We develop an efficient algorithm for optimizing the variational lower bound and demonstrate its efficiency compared to a Gibbs sampler. We also apply our variational approximation to the CRP-equivalent seqddCRP-mixture model, where it can be considered an alternative to the one based on the truncated stick-breaking representation. This allowed us to achieve a significantly better variational lower bound than the variational approximation based on truncated stick-breaking for the Dirichlet process.

-

Taiji Suzuki

Stochastic Dual Coordinate Ascent with Alternating Direction Method of Multipliers (pdf)

We propose a new stochastic dual coordinate ascent technique that can be applied to a wide range of regularized learning problems. Our method is based on the alternating direction method of multipliers (ADMM) to deal with complex regularization functions such as structured regularizations. Although the original ADMM is a batch method, the proposed method offers a stochastic update rule where each iteration requires only one or a few sample observations. Moreover, our method naturally supports mini-batch updates, which speed up convergence. We show that, under mild assumptions, our method converges exponentially. The numerical experiments show that our method performs efficiently in practice.
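To make the "one sample per iteration" idea concrete, here is a minimal sketch of plain stochastic dual coordinate ascent for ridge regression. It is not the ADMM-based method proposed in the abstract: it assumes a squared loss and a simple L2 regularizer, for which each dual update has a closed form. The function name `sdca_ridge` and its parameters are hypothetical.

```python
import random

def sdca_ridge(xs, ys, lam, epochs=200, seed=0):
    """Plain SDCA sketch (assumption: squared loss, L2 regularizer, no ADMM).

    Minimizes (1/n) * sum_i 0.5 * (w . x_i - y_i)^2 + (lam / 2) * ||w||^2.
    """
    rng = random.Random(seed)
    n, d = len(xs), len(xs[0])
    alpha = [0.0] * n   # one dual variable per training sample
    w = [0.0] * d       # primal iterate, kept equal to sum_i alpha_i x_i / (lam * n)
    for _ in range(epochs):
        for i in rng.sample(range(n), n):   # one random pass over the samples
            x, y = xs[i], ys[i]
            pred = sum(wj * xj for wj, xj in zip(w, x))
            sq_norm = sum(xj * xj for xj in x)
            # Closed-form maximizer of the dual objective in coordinate i.
            delta = (y - pred - alpha[i]) / (1.0 + sq_norm / (lam * n))
            alpha[i] += delta
            for j in range(d):
                w[j] += delta * x[j] / (lam * n)
    return w

# One-dimensional toy data; the exact ridge solution here is 28 / 17.
print(sdca_ridge([[1.0], [2.0], [3.0]], [2.0, 4.0, 6.0], lam=1.0))
```

Each inner step touches a single sample, which is the property that the abstract extends to structured regularizers.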

-

Yarin Gal and Zoubin Ghahramani

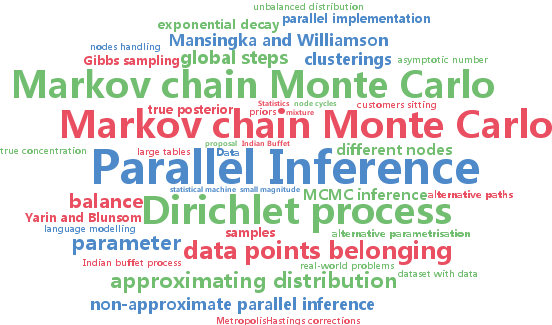

Pitfalls in the use of Parallel Inference for the Dirichlet Process (pdf)

Recent work by Lovell, Adams, and Mansinghka (2012) and Williamson, Dubey, and Xing (2013) has suggested an alternative parametrisation for the Dirichlet process in order to derive non-approximate parallel MCMC inference for it, work which has been picked up and implemented in several different fields. In this paper we show that the approach suggested is impractical due to an extremely unbalanced distribution of the data. We characterise the requirements of efficient parallel inference for the Dirichlet process and show that the proposed inference fails most of these requirements (while approximate approaches often satisfy most of them). We present both theoretical and experimental evidence, analysing the load balance for the inference and showing that it is independent of the size of the dataset and the number of nodes available in the parallel implementation. We end with suggestions of alternative paths of research for efficient non-approximate parallel inference for the Dirichlet process.

-

Nan Du and Yingyu Liang and Maria Balcan and Le Song

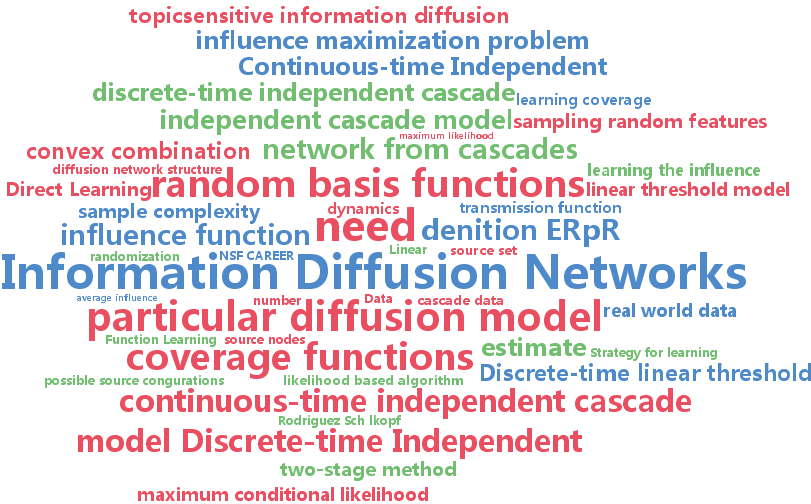

Influence Function Learning in Information Diffusion Networks (pdf)

Can we learn the influence of a set of people in a social network from cascades of information diffusion? This question is often addressed by a two-stage approach: first learn a diffusion model, and then calculate the influence based on the learned model. Thus, the success of this approach relies heavily on the correctness of the diffusion model which is hard to verify for real world data. In this paper, we exploit the insight that the influence functions in many diffusion models are coverage functions, and propose a novel parameterization of such functions using a convex combination of random basis functions. Moreover, we propose an efficient maximum likelihood based algorithm to learn such functions directly from cascade data, and hence bypass the need to specify a particular diffusion model in advance. We provide both theoretical and empirical analysis for our approach, showing that the proposed approach can provably learn the influence function with low sample complexity, be robust to the unknown diffusion models, and significantly outperform existing approaches in both synthetic and real world data.
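As a small illustration of what a coverage function is, the sketch below evaluates one on hand-made data. It does not implement the learning algorithm from the abstract; the names `coverage`, `reach`, and `weight` and the toy values are hypothetical.

```python
def coverage(selected, reach, weight):
    """f(S): total weight of the ground elements covered by any source in S."""
    covered = set()
    for source in selected:
        covered |= reach[source]
    return sum(weight[u] for u in covered)

# Hypothetical toy data: each source covers a set of weighted ground elements.
reach = {"a": {1, 2}, "b": {2, 3}, "c": {4}}
weight = {1: 4, 2: 3, 3: 2, 4: 1}

print(coverage({"a"}, reach, weight))        # 7
print(coverage({"b"}, reach, weight))        # 5
print(coverage({"a", "b"}, reach, weight))   # 9
```

Adding "b" to the empty set gains 5, but adding it to {"a"} gains only 2. This diminishing-returns behaviour is characteristic of coverage functions.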

-

Uri Shalit and Gal Chechik

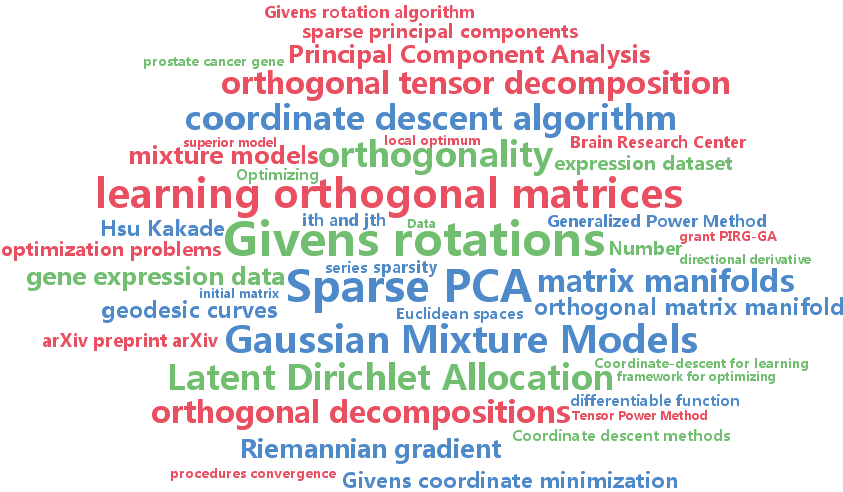

Coordinate-descent for learning orthogonal matrices through Givens rotations (pdf)

Optimizing over the set of orthogonal matrices is a central component in problems like sparse-PCA or tensor decomposition. Unfortunately, such optimization is hard since simple operations on orthogonal matrices easily break orthogonality, and correcting orthogonality usually costs a large amount of computation. Here we propose a framework for optimizing orthogonal matrices that is the parallel of coordinate descent in Euclidean spaces. It is based on Givens rotations …
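The elementary operation named in the abstract can be sketched as follows: multiplying an orthogonal matrix by a Givens rotation changes only two columns and keeps the matrix orthogonal. This shows the building block only, not the authors' coordinate-descent procedure; the helper names `apply_givens` and `orthogonality_error` are hypothetical.

```python
import math

def apply_givens(U, i, j, theta):
    """Return U @ G(i, j, theta); only columns i and j of U change."""
    c, s = math.cos(theta), math.sin(theta)
    out = [row[:] for row in U]
    for row_old, row_new in zip(U, out):
        row_new[i] = c * row_old[i] - s * row_old[j]
        row_new[j] = s * row_old[i] + c * row_old[j]
    return out

def orthogonality_error(U):
    """Largest absolute entry of U^T U - I."""
    n = len(U)
    return max(abs(sum(U[k][a] * U[k][b] for k in range(n)) - (a == b))
               for a in range(n) for b in range(n))

# Start from the identity and apply a few rotations with arbitrary angles.
U = [[1.0 if r == c else 0.0 for c in range(3)] for r in range(3)]
for i, j, theta in [(0, 1, 0.3), (1, 2, -1.2), (0, 2, 2.0)]:
    U = apply_givens(U, i, j, theta)
print(orthogonality_error(U))  # stays at floating-point round-off level
```

Each update costs time linear in the matrix dimension, and no separate re-orthogonalization step is needed.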

-

Qinxun Bai and Henry Lam and Stan Sclaroff

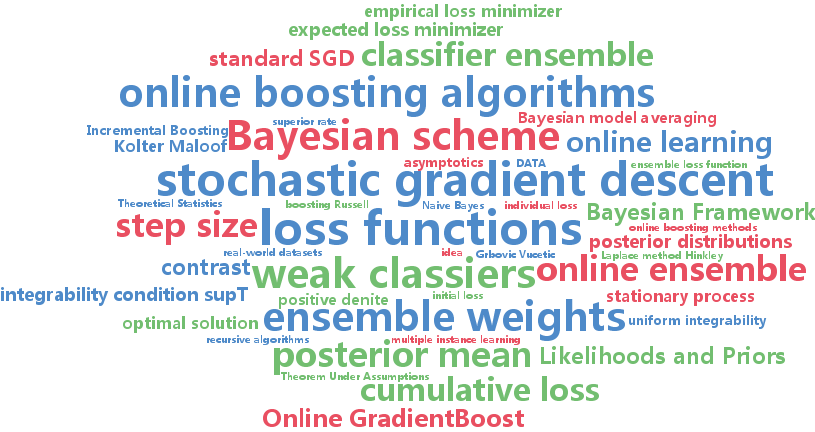

A Bayesian Framework for Online Classifier Ensemble (pdf)

We propose a Bayesian framework for recursively estimating the classifier weights in online learning of a classifier ensemble. In contrast with past methods, such as stochastic gradient descent or online boosting, our framework estimates the weights in terms of evolving posterior distributions. For a specified class of loss functions, we show that it is possible to formulate a suitably defined likelihood function and hence use the posterior distribution as an approximation to the global empirical loss minimizer. If the stream of training data is sampled from a stationary process, we can also show that our framework admits a superior rate of convergence to the expected loss minimizer than is possible with standard stochastic gradient descent. In experiments with real-world datasets, our formulation often performs better than online boosting algorithms.

-

Rajhans Samdani and Kai-Wei Chang and Dan Roth

A Discriminative Latent Variable Model for Online Clustering (pdf)

This paper presents a latent variable structured prediction model for discriminative supervised clustering of items called the Latent Left-linking Model (L3M). We present an online clustering algorithm for L3M based on a feature-based item similarity function. We provide a learning framework for estimating the similarity function and present a fast stochastic gradient-based learning technique. In our experiments on coreference resolution and document clustering, L3M outperforms several existing online as well as batch supervised clustering techniques.

-

Felix Yu and Sanjiv Kumar and Yunchao Gong and Shih-Fu Chang

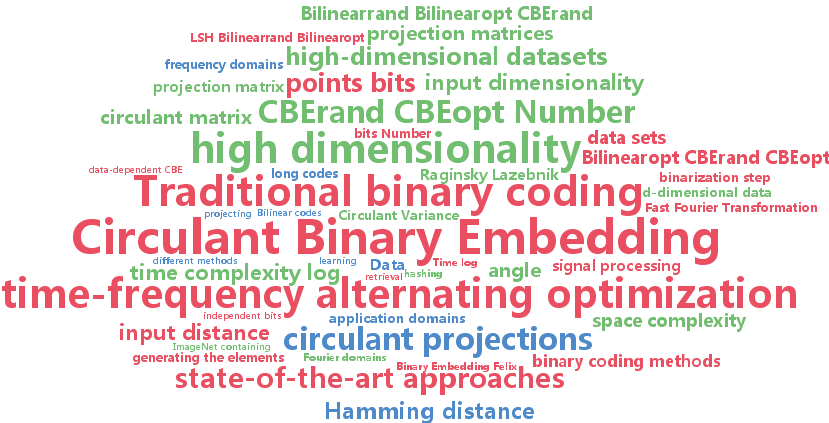

Circulant Binary Embedding (pdf)

Binary embedding of high-dimensional data requires long codes to preserve the discriminative power of the input space. Traditional binary coding methods often suffer from very high computation and storage costs in such a scenario. To address this problem, we propose Circulant Binary Embedding (CBE), which generates binary codes by projecting the data with a circulant matrix. The circulant structure enables the use of the Fast Fourier Transform to speed up the computation. Compared to methods that use unstructured matrices, the proposed method improves the time complexity from $\mathcal{O
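The role of the Fourier transform can be illustrated with a short sketch: a product with a circulant matrix is a circular convolution, so it can be computed in the Fourier domain. The code below is not the authors' implementation. It assumes that `circ(r)` has `r` as its first column, uses a naive DFT in place of a real FFT to stay dependency-free, and omits any learning of `r`; all function names are hypothetical.

```python
import cmath

def circulant_project(r, x):
    """Direct O(d^2) product circ(r) @ x, where circ(r) has first column r."""
    d = len(r)
    return [sum(r[(i - j) % d] * x[j] for j in range(d)) for i in range(d)]

def dft(v, inverse=False):
    """Naive O(d^2) DFT; an FFT computes the same values in O(d log d)."""
    d = len(v)
    sign = 1 if inverse else -1
    out = [sum(v[k] * cmath.exp(sign * 2j * cmath.pi * k * m / d) for k in range(d))
           for m in range(d)]
    return [z / d for z in out] if inverse else out

def circulant_project_fourier(r, x):
    """Same product via the convolution theorem: IDFT(DFT(r) * DFT(x))."""
    fr, fx = dft(r), dft(x)
    return [z.real for z in dft([a * b for a, b in zip(fr, fx)], inverse=True)]

def binary_code(projection):
    """Sign binarization: 1 for non-negative entries, 0 otherwise."""
    return [1 if p >= 0 else 0 for p in projection]

r = [1.0, -2.0, 0.5, 3.0]   # the d parameters that define the whole d x d matrix
x = [0.5, 1.0, -1.0, 2.0]   # input vector
print(binary_code(circulant_project(r, x)))          # [0, 0, 1, 1]
print(binary_code(circulant_project_fourier(r, x)))  # [0, 0, 1, 1]
```

Only the vector `r` has to be stored, rather than a full projection matrix.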

-

Justin Solomon and Raif Rustamov and Leonidas Guibas and Adrian Butscher

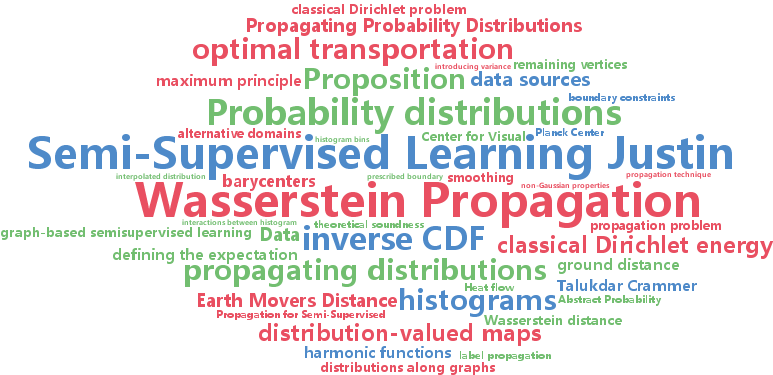

Wasserstein Propagation for Semi-Supervised Learning (pdf)

Probability distributions and histograms are natural representations for product ratings, traffic measurements, and other data considered in many machine learning applications. Thus, this paper introduces a technique for graph-based semi-supervised learning of histograms, derived from the theory of optimal transportation. Our method has several properties making it suitable for this application; in particular, its behavior can be characterized by the moments and shapes of the histograms at the labeled nodes. In addition, it can be used for histograms on non-standard domains like circles, revealing a strategy for manifold-valued semi-supervised learning. We also extend this technique to related problems such as smoothing distributions on graph nodes.

-

Hsiang-Fu Yu and Prateek Jain and Purushottam Kar and Inderjit Dhillon

Large-scale Multi-label Learning with Missing Labels (pdf)

The multi-label classification problem has generated significant interest in recent years. However, existing approaches do not adequately address two key challenges: (a) scaling up to problems with a large number (say millions) of labels, and (b) handling data with missing labels. In this paper, we directly address both these problems by studying the multi-label problem in a generic empirical risk minimization (ERM) framework. Our framework, despite being simple, is surprisingly able to encompass several recent label-compression based methods which can be derived as special cases of our method. To optimize the ERM problem, we develop techniques that exploit the structure of specific loss functions - such as the squared loss function - to obtain efficient algorithms. We further show that our learning framework admits excess risk bounds even in the presence of missing labels. Our bounds are tight and demonstrate better generalization performance for low-rank promoting trace-norm regularization when compared to (rank insensitive) Frobenius norm regularization. Finally, we present extensive empirical results on a variety of benchmark datasets and show that our methods perform significantly better than existing label compression based methods and can scale up to very large datasets such as a Wikipedia dataset that has more than 200,000 labels.

-

Stanislav Minsker and Sanvesh Srivastava and Lizhen Lin and David Dunson

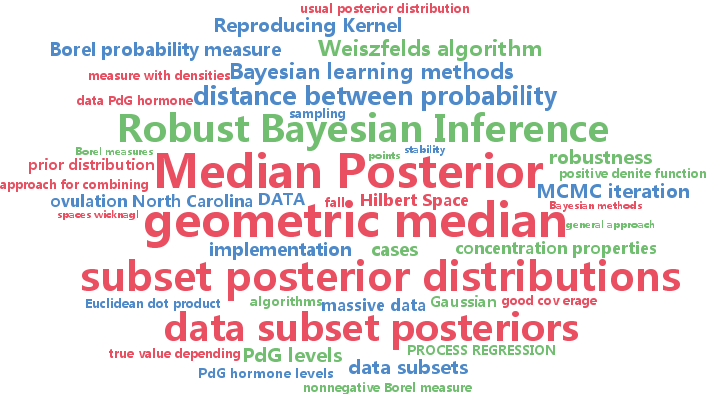

Scalable and Robust Bayesian Inference via the Median Posterior (pdf)

Many Bayesian learning methods for massive data benefit from working with small subsets of observations. In particular, significant progress has been made in scalable Bayesian learning via stochastic approximation. However, Bayesian learning methods in distributed computing environments are often problem- or distribution-specific and use ad hoc techniques. We propose a novel general approach to Bayesian inference that is scalable and robust to corruption in the data. Our technique is based on the idea of splitting the data into several non-overlapping subgroups, evaluating the posterior distribution given each independent subgroup, and then combining the results. The main novelty is the proposed aggregation step which is based on finding the geometric median of posterior distributions. We present both theoretical and numerical results illustrating the advantages of our approach.
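The robustness of a geometric median can be sketched with Weiszfeld's algorithm on ordinary Euclidean points. This is a simplification: the abstract takes the median of posterior distributions, whereas the sketch treats each subgroup result as a point, for example a posterior mean. The function name and the toy data are hypothetical.

```python
import math

def geometric_median(points, iters=200, eps=1e-12):
    """Weiszfeld iteration for the point minimizing the sum of Euclidean distances."""
    d = len(points[0])
    est = [sum(p[j] for p in points) / len(points) for j in range(d)]  # start at the mean
    for _ in range(iters):
        num = [0.0] * d
        den = 0.0
        for p in points:
            dist = math.sqrt(sum((pj - ej) ** 2 for pj, ej in zip(p, est)))
            wgt = 1.0 / max(dist, eps)   # guard against division by zero
            den += wgt
            for j in range(d):
                num[j] += wgt * p[j]
        est = [nj / den for nj in num]
    return est

# Four well-behaved subgroup summaries and one corrupted summary (toy values).
summaries = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [100.0, 100.0]]
mean = [sum(p[j] for p in summaries) / len(summaries) for j in range(2)]
print(mean)                         # dragged far away by the corrupted summary
print(geometric_median(summaries))  # stays inside the cluster of good summaries
```

A single corrupted subgroup moves the mean arbitrarily far, while the median stays within the unit square spanned by the other four points.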

-

Roni Mittelman and Benjamin Kuipers and Silvio Savarese and Honglak Lee

Structured Recurrent Temporal Restricted Boltzmann Machines (pdf)

The recurrent temporal restricted Boltzmann machine (RTRBM) is a probabilistic model for temporal data that has been shown to effectively capture both short- and long-term dependencies in time series. The topology of the RTRBM graphical model, however, assumes full connectivity between all pairs of visible and hidden units, thereby ignoring the dependency structure between the different observations. Learning this structure has the potential not only to improve the prediction performance but also to reveal important patterns in the data. For example, given an econometric dataset, we could identify interesting dependencies between different market sectors; given a meteorological dataset, we could identify regional weather patterns. In this work we propose a new class of RTRBM, which explicitly uses a dependency graph to model the structure in the problem and to define the energy function. We refer to the new model as the structured RTRBM (SRTRBM). Our technique is related to methods such as graphical lasso, which are used to learn the topology of Gaussian graphical models. We also develop a spike-and-slab version of the RTRBM, and combine it with our method to learn structure in datasets with real-valued observations. Our experimental results using synthetic and real datasets demonstrate that the SRTRBM can improve the prediction performance of the RTRBM, particularly when the number of visible units is large and the size of the training set is small. It also reveals the structure underlying our benchmark datasets.

-

Uri Heinemann and Amir Globerson

Inferning with High Girth Graphical Models (pdf)

Unsupervised learning of graphical models is an important task in many domains. Although maximum likelihood learning is computationally hard, there do exist consistent learning algorithms (e.g., pseudo-likelihood and its variants). However, inference in the learned models is still hard, and thus they are not directly usable. In other words, given a probabilistic query they are not guaranteed to provide an answer that is close to the true one. In the current paper, we provide a learning algorithm that is guaranteed to provide approximately correct probabilistic inference. We focus on a particular class of models, namely high girth graphs in the correlation decay regime. It is well known that approximate inference (e.g., using loopy BP) in such models yields marginals that are close to the true ones. Motivated by this, we propose an algorithm that always returns models of this type, and hence in the models it returns inference is approximately correct. We derive finite sample results guaranteeing that beyond a certain sample size, the resulting models will answer probabilistic queries with a high level of accuracy. Results on synthetic data show that the models we learn indeed outperform those obtained by other algorithms, which do not return high girth graphs.

-

Alekh Agarwal and Sham Kakade and Nikos Karampatziakis and Le Song and Gregory Valiant

Least Squares Revisited: Scalable Approaches for Multi-class Prediction (pdf)

This work provides simple algorithms for multi-class (and multi-label) prediction in settings where both the number of examples $n$ and the data dimension $d$ are relatively large. These robust and parameter-free algorithms are essentially iterative least-squares updates and are very versatile both in theory and in practice. On the theoretical front, we present several variants with convergence guarantees. Owing to their effective use of second-order structure, these algorithms are substantially better than first-order methods in many practical scenarios. On the empirical side, we show how to scale our approach to high-dimensional datasets, achieving dramatic computational speedups over popular optimization packages such as Liblinear and Vowpal Wabbit on standard datasets (MNIST and CIFAR-10), while attaining state-of-the-art accuracies.