convergence rate

-

Adams Wei Yu and Fatma Kilinc-Karzan and Jaime Carbonell

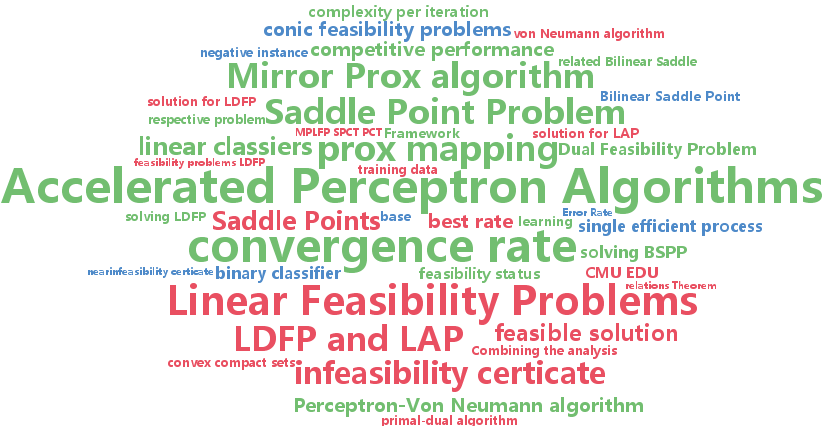

Saddle Points and Accelerated Perceptron Algorithms (pdf)

In this paper, we consider the problem of finding a linear (binary) classifier or providing a near-infeasibility certificate if there is none. We bring a new perspective to addressing these two problems simultaneously in a single efficient process, by investigating a related Bilinear Saddle Point Problem (BSPP). More specifically, we show that a BSPP-based approach provides either a linear classifier or an $\epsilon$-infeasibility certificate. We show that the accelerated primal-dual algorithm, Mirror Prox, can be used for this purpose and achieves the best known convergence rate of $O({\sqrt{\log n

-

Wenliang Zhong and James Kwok

Fast Stochastic Alternating Direction Method of Multipliers (pdf)

We propose a new stochastic alternating direction method of multipliers (ADMM) algorithm, which incrementally approximates the full gradient in the linearized ADMM formulation. Besides having a per-iteration complexity as low as that of existing stochastic ADMM algorithms, it improves the convergence rate on convex problems from $\mathcal{O}(1/\sqrt{T

-

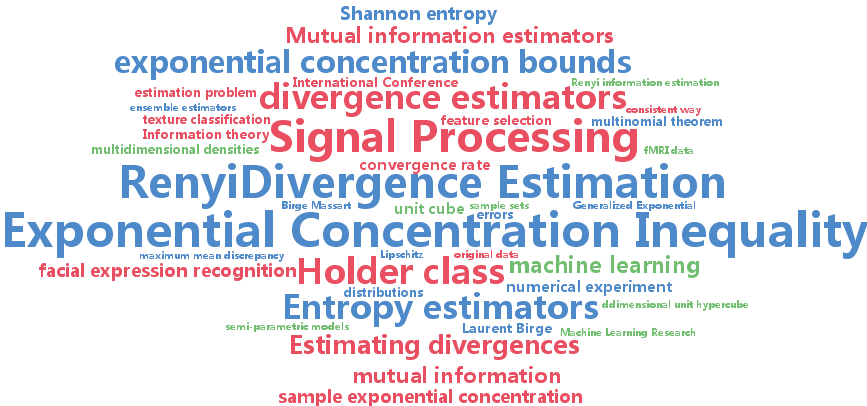

Shashank Singh and Barnabas Poczos

Generalized Exponential Concentration Inequality for Renyi Divergence Estimation (pdf)

Estimating divergences between probability distributions in a consistent way is of great importance in many machine learning tasks. Although this is a fundamental problem in nonparametric statistics, to the best of our knowledge there has been no finite sample exponential inequality convergence bound derived for any divergence estimators. The main contribution of our work is to provide such a bound for an estimator of Renyi divergence for a smooth Holder class of densities on the d-dimensional unit cube. We also illustrate our theoretical results with a numerical experiment.
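For reference, the quantity being estimated can be written out. The abstract does not give the formula, so this is the standard textbook definition of the Renyi-$\alpha$ divergence, with the symbols $p$, $q$ (the two densities) and $\alpha$ (the order) assumed here, and the integral taken over the unit cube mentioned above:

$$
D_\alpha(p \,\|\, q) \;=\; \frac{1}{\alpha - 1} \,\log \int_{[0,1]^d} p^{\alpha}(x)\, q^{1-\alpha}(x)\, dx, \qquad \alpha \in (0,1) \cup (1,\infty).
$$

As $\alpha \to 1$ this recovers the Kullback-Leibler divergence.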

-

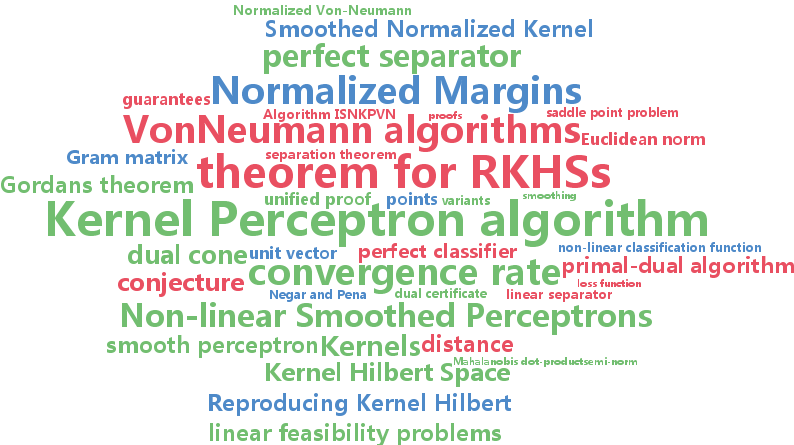

Aaditya Ramdas and Javier Peña

Margins, Kernels and Non-linear Smoothed Perceptrons (pdf)

We focus on the problem of finding a non-linear classification function that lies in a Reproducing Kernel Hilbert Space (RKHS) both from the primal point of view (finding a perfect separator when one exists) and the dual point of view (giving a certificate of non-existence), with special focus on generalizations of two classical schemes - the Perceptron (primal) and Von-Neumann (dual) algorithms. We cast our problem as one of maximizing the regularized normalized hard-margin ($\rho$) in an RKHS and use the Representer Theorem to rephrase it in terms of a Mahalanobis dot-product/semi-norm associated with the kernel's (normalized and signed) Gram matrix. We derive an accelerated smoothed algorithm with a convergence rate of $\tfrac{\sqrt {\log n

-

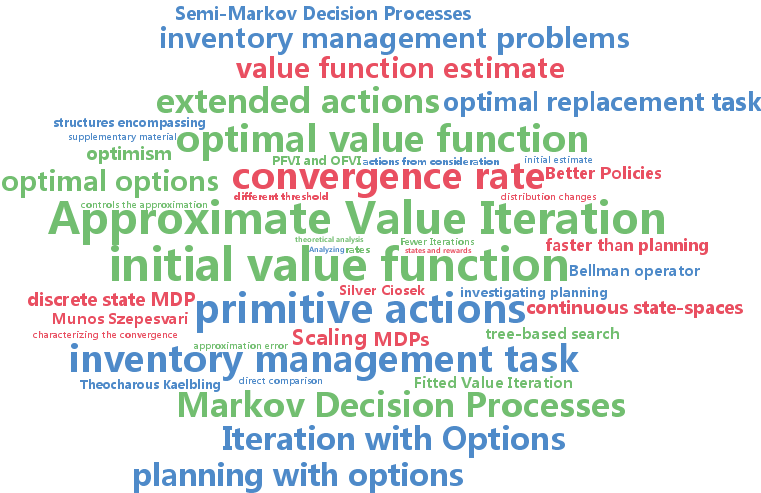

Timothy Mann and Shie Mannor

Scaling Up Approximate Value Iteration with Options: Better Policies with Fewer Iterations (pdf)

We show how options, a class of control structures encompassing primitive and temporally extended actions, can play a valuable role in planning in MDPs with continuous state-spaces. Analyzing the convergence rate of Approximate Value Iteration with options reveals that for pessimistic initial value function estimates, options can speed up convergence compared to planning with only primitive actions even when the temporally extended actions are suboptimal and sparsely scattered throughout the state-space. Our experimental results in an optimal replacement task and a complex inventory management task demonstrate the potential for options to speed up convergence in practice. We show that options induce faster convergence to the optimal value function, which implies deriving better policies with fewer iterations.
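The effect described in this abstract can be illustrated with a toy sketch. This is not the paper's setting or algorithm: it uses exact (tabular) value iteration on a small deterministic chain rather than approximate value iteration in a continuous state-space, and the chain, the reward of 1 on entering the goal, the function name `value_iteration`, and the option "move right for up to `k` steps" are all assumptions made for illustration. Starting from the pessimistic estimate $V_0 = 0$, value information travels one state per sweep with primitive actions only, and up to `k` states per sweep once a `k`-step option is available.

```python
import numpy as np

def value_iteration(n_states, gamma, option_lengths, tol=1e-9, max_iters=10_000):
    """Value iteration on a deterministic chain 0 -> 1 -> ... -> goal.

    Each entry k of option_lengths is a (hypothetical) option that moves
    right for up to k time steps; k = 1 is the primitive action.
    Entering the goal pays reward 1; the goal is absorbing with value 0.
    Returns the value function and the number of sweeps performed.
    """
    goal = n_states - 1
    V = np.zeros(n_states)  # pessimistic start: rewards are non-negative, so 0 <= V*
    for sweep in range(1, max_iters + 1):
        V_new = np.zeros(n_states)
        for s in range(goal):
            best = 0.0
            for k in option_lengths:
                steps = min(k, goal - s)   # the option stops early at the goal
                nxt = s + steps
                # Reward 1 arrives on the final step if that step enters the goal.
                reward = gamma ** (steps - 1) if nxt == goal else 0.0
                best = max(best, reward + gamma ** steps * V[nxt])
            V_new[s] = best
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, sweep
        V = V_new
    return V, max_iters

V_prim, sweeps_prim = value_iteration(21, 0.9, option_lengths=[1])
V_opt, sweeps_opt = value_iteration(21, 0.9, option_lengths=[1, 5])
print("primitive actions only:", sweeps_prim, "sweeps")
print("with a 5-step option:  ", sweeps_opt, "sweeps")
```

Both runs reach the same value function; only the number of sweeps differs. In this toy chain the option happens to be optimal, whereas the abstract's claim also covers suboptimal options.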

-

Jian Tang and Zhaoshi Meng and Xuanlong Nguyen and Qiaozhu Mei and Ming Zhang

Understanding the Limiting Factors of Topic Modeling via Posterior Contraction Analysis (pdf)

Topic models such as latent Dirichlet allocation (LDA) have become a staple in the modeling toolbox of machine learning. They have been applied to a vast variety of data sets, contexts, and tasks with varying degrees of success. However, to date there is almost no formal theory explicating LDA's behavior, and despite its familiarity there is very little systematic analysis of, or guidance on, the properties of the data that affect the inferential performance of the model. This paper seeks to address this gap by providing a systematic analysis of the factors that characterize LDA's performance. We present theorems elucidating the posterior contraction rates of the topics as the amount of data increases, and a thorough supporting empirical study using synthetic and real data sets, including news and web-based articles and tweet messages. Based on these results we provide practical guidance on how to identify suitable data sets for topic models, and how to specify particular model parameters.

-

Akshay Krishnamurthy and Kirthevasan Kandasamy and Barnabas Poczos and Larry Wasserman

Nonparametric Estimation of Renyi Divergence and Friends (pdf)

We consider nonparametric estimation of $L_2$, Renyi-$\alpha$ and Tsallis-$\alpha$ divergences between continuous distributions. Our approach is to construct estimators for particular integral functionals of two densities and translate them into divergence estimators. For the integral functionals, our estimators are based on corrections of a preliminary plug-in estimator. We show that these estimators achieve the parametric convergence rate of $n^{-1/2