active learning

-

Filipe Rodrigues and Francisco Pereira and Bernardete Ribeiro

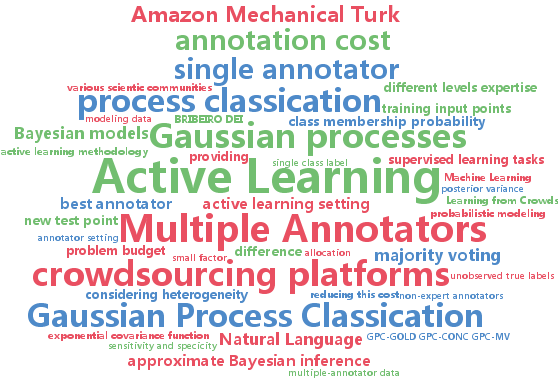

Gaussian Process Classification and Active Learning with Multiple Annotators (pdf)

Learning from multiple annotators took a valuable step towards modelling data that does not fit the usual single annotator setting. However, multiple annotators sometimes offer varying degrees of expertise. When disagreements arise, the establishment of the correct label through trivial solutions such as majority voting may not be adequate, since without considering heterogeneity in the annotators, we risk generating a flawed model. In this paper, we extend GP classification in order to account for multiple annotators with different levels of expertise. By explicitly handling uncertainty, Gaussian processes (GPs) provide a natural framework to build proper multiple-annotator models. We empirically show that our model significantly outperforms other commonly used approaches, such as majority voting, without a significant increase in the computational cost of approximate Bayesian inference. Furthermore, an active learning methodology is proposed, which is able to reduce annotation cost even further.
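To illustrate why majority voting can fail when annotators differ in expertise, here is a minimal sketch comparing it with a reliability-weighted vote on binary labels. This is not the GP model from the paper: the per-annotator accuracies are assumed to be known in advance (the paper instead handles annotator heterogeneity inside the model), and the function names `majority_vote` and `weighted_vote` are hypothetical.

```python
import math


def majority_vote(labels):
    """Return 1 if strictly more than half of the binary labels are 1, else 0."""
    return 1 if 2 * sum(labels) > len(labels) else 0


def weighted_vote(labels, accuracies):
    """Combine binary labels using log-odds weights.

    Assumption for illustration only: each annotator's accuracy is known
    and lies strictly between 0.5 and 1.
    """
    score = 0.0
    for label, acc in zip(labels, accuracies):
        weight = math.log(acc / (1.0 - acc))  # more reliable => larger weight
        score += weight if label == 1 else -weight
    return 1 if score > 0 else 0


# One expert says 1; two weak annotators say 0.
labels = [1, 0, 0]
accuracies = [0.95, 0.6, 0.6]  # assumed, not estimated from data

print(majority_vote(labels))              # the two weak annotators win
print(weighted_vote(labels, accuracies))  # the expert's vote dominates
```

In this toy case the two votes disagree, which is the kind of situation where ignoring annotator heterogeneity can mislead a downstream model.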

-

Lijun Zhang and Jinfeng Yi and Rong Jin

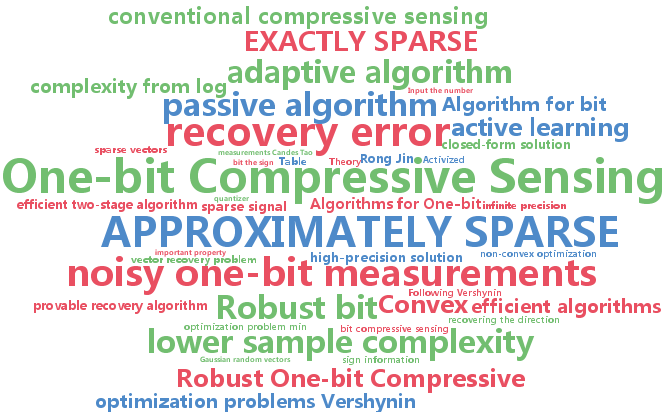

Efficient Algorithms for Robust One-bit Compressive Sensing (pdf)

While conventional compressive sensing assumes measurements of infinite precision, one-bit compressive sensing considers an extreme setting where each measurement is quantized to just a single bit. In this paper, we study the vector recovery problem from noisy one-bit measurements, and develop two novel algorithms with formal theoretical guarantees. First, we propose a passive algorithm, which is very efficient in the sense that it only needs to solve a convex optimization problem that has a closed-form solution. Despite the apparent simplicity, our theoretical analysis reveals that the proposed algorithm can recover both the exactly sparse and the approximately sparse vectors. In particular, for a sparse vector with $s$ nonzero elements, the sample complexity is $O(s \log n/\epsilon^2)$, where $n$ is the dimensionality and $\epsilon$ is the recovery error. This result improves significantly over the previously best known sample complexity in the noisy setting, which is $O(s \log n/\epsilon^4)$. Second, in the case that the noise model is known, we develop an adaptive algorithm based on the principle of active learning. The key idea is to solicit the sign information only when it cannot be inferred from the current estimator. Compared with the passive algorithm, the adaptive one has a lower sample complexity if a high-precision solution is desired.
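The abstract does not state the convex program or its closed form, so the following is only a sketch of what passive recovery from one-bit measurements could look like. It assumes an $\ell_1$-regularized linear objective maximized over the unit $\ell_2$ ball, whose maximizer is a soft-thresholded, normalized correlation vector. The Gaussian measurement matrix, the sign-flip noise model, the threshold `gamma`, and the function names are all assumptions made for illustration.

```python
import numpy as np


def one_bit_measure(A, x, flip_prob=0.0, rng=None):
    """Return sign measurements of A @ x, each flipped with probability flip_prob."""
    rng = np.random.default_rng() if rng is None else rng
    y = np.sign(A @ x)
    y[y == 0] = 1.0  # treat exact zeros as +1
    flips = rng.random(y.shape[0]) < flip_prob
    y[flips] *= -1.0
    return y


def passive_recover(A, y, gamma):
    """Soft-threshold the correlation vector A^T y / m, then normalize.

    Assumed objective: maximize (1/m) * y^T A w - gamma * ||w||_1
    subject to ||w||_2 <= 1.
    """
    m = A.shape[0]
    z = A.T @ y / m
    w = np.sign(z) * np.maximum(np.abs(z) - gamma, 0.0)
    norm = np.linalg.norm(w)
    return w / norm if norm > 0 else w


rng = np.random.default_rng(0)
n, s, m = 200, 5, 2000

# Unit-norm sparse target with s nonzero entries of equal magnitude.
x = np.zeros(n)
support = rng.choice(n, size=s, replace=False)
x[support] = rng.choice([-1.0, 1.0], size=s) / np.sqrt(s)

A = rng.standard_normal((m, n))
y = one_bit_measure(A, x, flip_prob=0.1, rng=rng)

x_hat = passive_recover(A, y, gamma=np.sqrt(np.log(n) / m))
print("recovery error:", np.linalg.norm(x_hat - x))
```

Because one-bit measurements discard magnitude, only the direction of the vector is recoverable, which is why the estimate is normalized to unit length.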

-

Yuxin Chen and Hiroaki Shioi and Cesar Fuentes Montesinos and Lian Pin Koh and Serge Wich and Andreas Krause

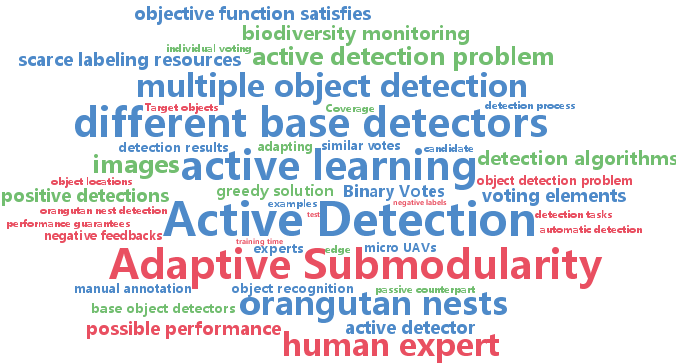

Active Detection via Adaptive Submodularity (pdf)

Efficient detection of multiple object instances is one of the fundamental challenges in computer vision. For certain object categories, even the best automatic systems are not yet able to produce high-quality detection results, and fully manual annotation would be an expensive process. How can detection algorithms interplay with human expert annotators? To make the best use of scarce (human) labeling resources, one needs to decide when to invoke the expert, such that the best possible performance can be achieved while requiring a minimum amount of supervision. In this paper, we propose a principled approach to active object detection, and show that for a rich class of base detector algorithms, one can derive a natural sequential decision problem for deciding when to invoke expert supervision. We further show that the objective function satisfies adaptive submodularity, which allows us to derive strong performance guarantees for our algorithm. We demonstrate the proposed algorithm on three real-world tasks, including a problem for biodiversity monitoring from micro UAVs in the Sumatra rain forest. Our results show that active detection not only outperforms its passive counterpart; for certain tasks, it also works significantly better than straightforward application of existing active learning techniques. To the best of our knowledge, our approach is the first to rigorously address the active detection problem from both empirical and theoretical perspectives.

-

Bruno Da Silva and George Konidaris and Andrew Barto

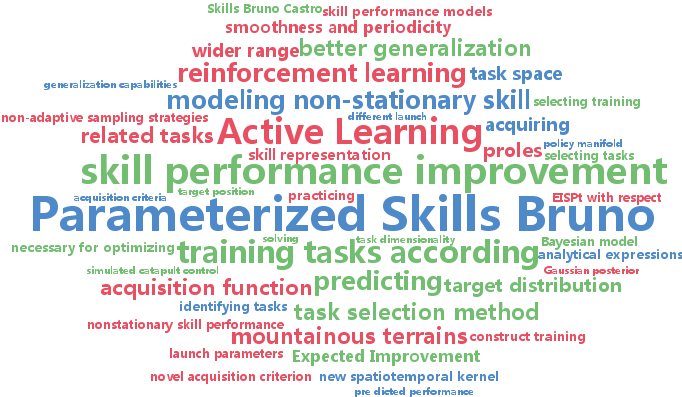

Active Learning of Parameterized Skills (pdf)

We introduce a method for actively learning parameterized skills. Parameterized skills are flexible behaviors that can solve any task drawn from a distribution of parameterized reinforcement learning problems. Approaches to learning such skills have been proposed, but limited attention has been given to identifying which training tasks allow for rapid skill acquisition. We construct a non-parametric Bayesian model of skill performance and derive analytical expressions for a novel acquisition criterion capable of identifying tasks that maximize expected improvement in skill performance. We also introduce a spatiotemporal kernel tailored for non-stationary skill performance models. The proposed method is agnostic to policy and skill representation and scales independently of task dimensionality. We evaluate it on a non-linear simulated catapult control problem over arbitrarily mountainous terrains.

-

Jose Miguel Hernandez-Lobato and Neil Houlsby and Zoubin Ghahramani

Stochastic Inference for Scalable Probabilistic Modeling of Binary Matrices (pdf)

Fully observed large binary matrices appear in a wide variety of contexts. To model them, probabilistic matrix factorization (PMF) methods are an attractive solution. However, current batch algorithms for PMF can be inefficient because they need to analyze the entire data matrix before producing any parameter updates. We derive an efficient stochastic inference algorithm for PMF models of fully observed binary matrices. Our method exhibits faster convergence rates than more expensive batch approaches and has better predictive performance than scalable alternatives. The proposed method includes new data subsampling strategies which produce large gains over standard uniform subsampling. We also address the task of automatically selecting the size of the minibatches of data used by our method. For this, we derive an algorithm that adjusts this hyper-parameter online.

-

Daniel Hsu and Sivan Sabato

Heavy-tailed regression with a generalized median-of-means (pdf)

This work proposes a simple and computationally efficient estimator for linear regression, and other smooth and strongly convex loss minimization problems. We prove loss approximation guarantees that hold for general distributions, including those with heavy tails. All prior results only hold for estimators which either assume bounded or subgaussian distributions, require prior knowledge of distributional properties, or are not known to be computationally tractable. In the special case of linear regression with possibly heavy-tailed responses and with bounded and well-conditioned covariates in $d$-dimensions, we show that a random sample of size $\tilde{O