robustness

-

Joachim Giesen and Soeren Laue and Patrick Wieschollek

Robust and Efficient Kernel Hyperparameter Paths with Guarantees (pdf)

Algorithmically, many machine learning tasks boil down to solving parameterized optimization problems. Finding good values for the parameters has significant influence on the statistical performance of these methods. Thus supporting the choice of parameter values algorithmically has received quite some attention recently, especially algorithms for computing the whole solution path of parameterized optimization problems. These algorithms can be used, for instance, to track the solution of a regularized learning problem along the regularization parameter path, or for tracking the solution of kernelized problems along a kernel hyperparameter path. Since exact path following algorithms can be numerically unstable, robust and efficient approximate path tracking algorithms have become popular for regularized learning problems. By now algorithms with optimal path complexity are known for many regularized learning problems. That is not the case for kernel hyperparameter path tracking algorithms, where the exact path tracking algorithms can also suffer from numerical instabilities. The robust approximation algorithms for regularization path tracking cannot be used directly for kernel hyperparameter path tracking problems since the latter fall into a different problem class. Here we address this problem by devising a robust and efficient path tracking algorithm that can also handle kernel hyperparameter paths and has asymptotically optimal complexity. We use this algorithm to compute approximate kernel hyperparameter solution paths for support vector machines and robust kernel regression. Experimental results for this problem applied to various data sets confirm the theoretical complexity analysis.

-

Shike Mei and Jun Zhu and Jerry Zhu

Robust RegBayes: Selectively Incorporating First-Order Logic Domain Knowledge into Bayesian Models (pdf)

Much research in Bayesian modeling has been done to elicit a prior distribution that incorporates domain knowledge. We present a novel and more direct approach by imposing First-Order Logic (FOL) rules on the posterior distribution. Our approach unifies FOL and Bayesian modeling under the regularized Bayesian framework. In addition, our approach automatically estimates the uncertainty of FOL rules when they are produced by humans, so that reliable rules are incorporated while unreliable ones are ignored. We apply our approach to latent topic modeling tasks and demonstrate that by combining FOL knowledge and Bayesian modeling, we both improve the task performance and discover more structured latent representations in unsupervised and supervised learning.

-

Panagiotis Toulis and Edoardo Airoldi and Jason Rennie

Statistical analysis of stochastic gradient methods for generalized linear models (pdf)

We study the statistical properties of stochastic gradient descent (SGD) using explicit and implicit updates for fitting generalized linear models (GLMs). Initially, we develop a computationally efficient algorithm to implement implicit SGD learning of GLMs. Next, we obtain exact formulas for the bias and variance of both updates, which lead to two important observations on their comparative statistical properties. First, in small samples, the estimates from the implicit procedure are more biased than the estimates from the explicit one, but their empirical variance is smaller and they are more robust to learning rate misspecification. Second, the two procedures are statistically identical in the limit: they are both unbiased, converge at the same rate and have the same asymptotic variance. Our experiments confirm our theory and more broadly suggest that the implicit procedure can be a competitive choice for fitting large-scale models, especially when robustness is a concern.
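
To make the explicit/implicit distinction concrete, here is a minimal sketch for the simplest GLM, a linear model with identity link and squared-error loss. It is not the authors' algorithm for general GLMs; the function names and the toy numbers are assumptions chosen for illustration. In this special case the implicit update, which evaluates the residual at the new parameter value, has a closed form that shrinks the step by a factor of 1 / (1 + rate * ||x||^2).

```python
def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def residual(theta, x, y):
    """Residual y - x^T theta of the linear model at theta."""
    return y - dot(x, theta)


def explicit_step(theta, x, y, rate):
    """Explicit SGD: the residual is evaluated at the OLD parameters."""
    r = residual(theta, x, y)
    return [t + rate * r * xi for t, xi in zip(theta, x)]


def implicit_step(theta, x, y, rate):
    """Implicit SGD: the residual is evaluated at the NEW parameters.

    For the linear model, solving theta_new = theta + rate * (y - x^T theta_new) * x
    gives the old residual scaled by 1 / (1 + rate * ||x||^2).
    """
    r_old = residual(theta, x, y)
    shrink = 1.0 / (1.0 + rate * dot(x, x))
    return [t + rate * shrink * r_old * xi for t, xi in zip(theta, x)]


if __name__ == "__main__":
    # Hypothetical single observation with a deliberately large learning rate.
    theta0, x, y, rate = [0.0, 0.0], [1.0, 2.0], 1.0, 1.0
    print("explicit residual after step:", residual(explicit_step(theta0, x, y, rate), x, y))
    print("implicit residual after step:", residual(implicit_step(theta0, x, y, rate), x, y))
```

With an oversized learning rate the explicit step overshoots and the residual grows in magnitude, whereas the implicit step can never increase it. That is one simple way to see the robustness to learning rate misspecification mentioned in the abstract.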

-

Feiping Nie and Jianjun Yuan and Heng Huang

Optimal Mean Robust Principal Component Analysis (pdf)

Dimensionality reduction techniques extract low-dimensional structure from high-dimensional data and are widespread in machine learning research. In practice, because labeled data are often lacking, unsupervised dimensionality reduction algorithms are preferred. Among them, Principal Component Analysis (PCA) is the most widely used approach. In recent research, several robust PCA algorithms were presented to enhance the robustness of the PCA model. However, all existing robust PCA methods incorrectly center the data using the L2-norm distance to calculate the mean, which is not the optimal mean given the L1-norm used in the objective functions. It is non-trivial to remove the optimal mean in robust PCA, because of the sparsity-inducing norms used in the robust formulations. In this paper, we propose novel robust PCA objective functions that remove the optimal mean automatically. We naturally integrate the mean calculation into the dimensionality reduction optimization, such that the optimal mean can be obtained to enhance the dimensionality reduction. Both theoretical analysis and empirical studies demonstrate that our new methods can reduce data dimensionality more effectively than previous robust PCA methods.

-

Jose Miguel Hernandez-Lobato and Neil Houlsby and Zoubin Ghahramani

Probabilistic Matrix Factorization with Non-random Missing Data (pdf)

We propose a probabilistic matrix factorization model for collaborative filtering that learns from data that is missing not at random (MNAR). Matrix factorization models exhibit state-of-the-art predictive performance in collaborative filtering. However, these models usually assume that the data is missing at random (MAR), and this is rarely the case. For example, the data is not MAR if users rate items they like more than ones they dislike. When the MAR assumption is incorrect, inferences are biased and predictive performance can suffer. Therefore, we model both the generative process for the data and the missing data mechanism. By learning these two models jointly we obtain improved performance over state-of-the-art methods when predicting the ratings and when modeling the data observation process. We present the first viable MF model for MNAR data. Our results are promising and we expect that further research on MNAR models will yield large gains in collaborative filtering.
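
The bias described above can be illustrated with a small worked calculation. This is only a toy sketch, not the model from the paper: the rating distribution, the observation probabilities, and the function name `observed_mean` are all hypothetical. It computes the expected mean of the ratings that end up being observed when the chance of observing a rating depends on its value.

```python
def observed_mean(rating_probs, observe_probs):
    """Expected rating among observed entries.

    rating_probs[r]  : probability that the true rating equals r
    observe_probs[r] : probability that a rating of value r is observed
    """
    numerator = sum(r * rating_probs[r] * observe_probs[r] for r in rating_probs)
    denominator = sum(rating_probs[r] * observe_probs[r] for r in rating_probs)
    return numerator / denominator


# Hypothetical setup: true ratings are uniform on 1..5, so the true mean is 3.
ratings = {r: 0.2 for r in range(1, 6)}

# MAR-like case: every rating is equally likely to be observed.
mar = {r: 0.3 for r in range(1, 6)}

# MNAR case: users are more likely to rate items they like.
mnar = {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4, 5: 0.5}

if __name__ == "__main__":
    print("observed mean, value-independent missingness:", observed_mean(ratings, mar))
    print("observed mean, value-dependent missingness:  ", observed_mean(ratings, mnar))
```

Under value-independent missingness the observed mean matches the true mean of 3, while under the value-dependent mechanism it is 5.5 / 1.5, roughly 3.67. A model that ignores the observation process would therefore overestimate how much users like the items.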

-

Stanislav Minsker and Sanvesh Srivastava and Lizhen Lin and David Dunson

Scalable and Robust Bayesian Inference via the Median Posterior (pdf)

Many Bayesian learning methods for massive data benefit from working with small subsets of observations. In particular, significant progress has been made in scalable Bayesian learning via stochastic approximation. However, Bayesian learning methods in distributed computing environments are often problem- or distribution-specific and use ad hoc techniques. We propose a novel general approach to Bayesian inference that is scalable and robust to corruption in the data. Our technique is based on the idea of splitting the data into several non-overlapping subgroups, evaluating the posterior distribution given each independent subgroup, and then combining the results. The main novelty is the proposed aggregation step which is based on finding the geometric median of posterior distributions. We present both theoretical and numerical results illustrating the advantages of our approach.
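
The aggregation idea can be sketched in a simplified setting. The paper takes the geometric median of posterior distributions; the sketch below instead takes the geometric median of plain Euclidean points (for example, one summary vector per data subgroup), which is an assumption made here for illustration. It uses the standard Weiszfeld iteration; the function name and default tolerances are hypothetical.

```python
import math


def geometric_median(points, tol=1e-9, max_iter=200, eps=1e-12):
    """Approximate the geometric median of a list of points (Weiszfeld iteration).

    The geometric median minimizes the sum of Euclidean distances to the points.
    eps guards against division by zero when an iterate lands on a data point.
    """
    dim = len(points[0])
    # Start from the coordinate-wise mean.
    current = [sum(p[k] for p in points) / len(points) for k in range(dim)]
    for _ in range(max_iter):
        # Each point is weighted by the inverse of its distance to the iterate.
        weights = [1.0 / max(math.dist(p, current), eps) for p in points]
        total = sum(weights)
        updated = [
            sum(w * p[k] for w, p in zip(weights, points)) / total
            for k in range(dim)
        ]
        shift = math.dist(updated, current)
        current = updated
        if shift < tol:
            break
    return current


if __name__ == "__main__":
    # Hypothetical subgroup summaries: three agree, one is badly corrupted.
    summaries = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [1000.0, 1000.0]]
    mean = [sum(p[k] for p in summaries) / len(summaries) for k in range(2)]
    print("mean:            ", mean)
    print("geometric median:", geometric_median(summaries))
```

In this toy input a single corrupted subgroup drags the mean far from the majority, while the geometric median stays with the three subgroups that agree. This is the kind of robustness to corruption that motivates a median-based aggregation step.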

-

Qian Zhao and Deyu Meng and Zongben Xu and Wangmeng Zuo and Lei Zhang

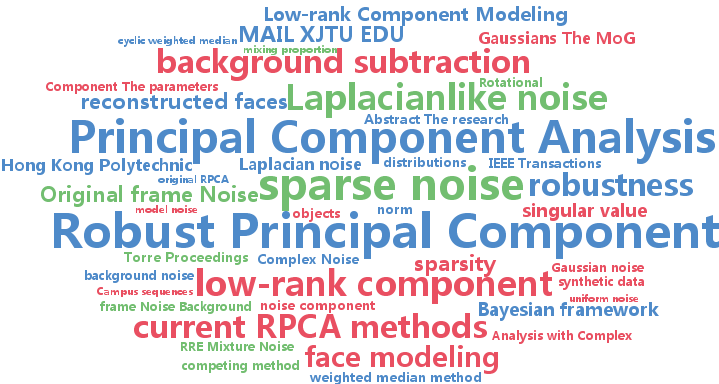

Robust Principal Component Analysis with Complex Noise (pdf)

Research on robust principal component analysis (RPCA) has been attracting much attention recently. The original RPCA model assumes sparse noise and uses the $L_1$-norm to characterize the error term. In practice, however, the noise is much more complex and it is not appropriate to simply use a certain $L_p$-norm for noise modeling. We propose a generative RPCA model under the Bayesian framework by modeling data noise as a mixture of Gaussians (MoG). The MoG is a universal approximator to continuous distributions and thus our model is able to fit a wide range of noise types, such as Laplacian, Gaussian, and sparse noise, and any combination of them. A variational Bayes algorithm is presented to infer the posterior of the proposed model. All involved parameters can be recursively updated in closed form. The advantage of our method is demonstrated by extensive experiments on synthetic data, face modeling and background subtraction.