principal component analysis

-

David Lopez-Paz and Suvrit Sra and Alex Smola and Zoubin Ghahramani and Bernhard Schoelkopf

Randomized Nonlinear Component Analysis (pdf)

Classical methods such as Principal Component Analysis (PCA) and Canonical Correlation Analysis (CCA) are ubiquitous in statistics. However, these techniques are only able to reveal linear relationships in data. Although nonlinear variants of PCA and CCA have been proposed, these are computationally prohibitive at large scale. In a separate strand of recent research, randomized methods have been proposed to construct features that help reveal nonlinear patterns in data. For basic tasks such as regression or classification, random features exhibit little or no loss in performance, while achieving drastic savings in computational requirements. In this paper we leverage randomness to design scalable new variants of nonlinear PCA and CCA; our ideas extend to key multivariate analysis tools such as spectral clustering or LDA. We demonstrate our algorithms through experiments on real-world data, on which we compare against the state-of-the-art. A simple R implementation of the presented algorithms is provided.
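The idea of combining random features with linear PCA can be sketched roughly as follows. This is only an illustration in Python with NumPy, not the R implementation mentioned in the abstract: the choice of random Fourier (cosine) features for an RBF kernel, the function names, and the parameters `n_features` and `gamma` are all assumptions made for the example.

```python
import numpy as np

def random_fourier_features(X, n_features=200, gamma=1.0, seed=0):
    """Map X (n, d) to random cosine features whose inner products
    approximate the RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Frequencies drawn from the kernel's spectral density N(0, 2*gamma*I).
    W = rng.normal(scale=np.sqrt(2.0 * gamma), size=(d, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)

def randomized_nonlinear_pca(X, n_components=2, **kwargs):
    """Linear PCA applied to random nonlinear features of X."""
    Z = random_fourier_features(X, **kwargs)
    Z = Z - Z.mean(axis=0)                      # center the features
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    return Z @ Vt[:n_components].T              # component scores

rng = np.random.default_rng(1)
X = rng.normal(size=(300, 5))                   # synthetic data
Y = randomized_nonlinear_pca(X, n_components=2, n_features=100, gamma=0.5)
print(Y.shape)
```

The cost is dominated by an SVD of an `n x n_features` matrix rather than an eigendecomposition of an `n x n` kernel matrix, which is where the savings over exact kernel PCA would come from when `n_features` is much smaller than `n`.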

-

Hua Wang and Feiping Nie and Heng Huang

Robust Distance Metric Learning via Simultaneous L1-Norm Minimization and Maximization (pdf)

Traditional distance metric learning with side information usually formulates the objectives using the covariance matrices of the data point pairs in the two constraint sets of must-links and cannot-links. Because the covariance matrix computes the sum of the squared L2-norm distances, it is sensitive to both outlier samples and outlier features. To develop a robust distance metric learning method, in this paper we propose a new objective for distance metric learning using the L1-norm distances. However, the resulting objective is very challenging to solve, because it simultaneously minimizes and maximizes (minmax) a number of non-smooth L1-norm terms. As an important theoretical contribution of this paper, we systematically derive an efficient iterative algorithm to solve the general L1-norm minmax problem, which is rarely studied in the literature. We have performed extensive empirical evaluations, where our new distance metric learning method outperforms related state-of-the-art methods in a variety of experimental settings to cluster both noiseless and noisy data.

-

Feiping Nie and Jianjun Yuan and Heng Huang

Optimal Mean Robust Principal Component Analysis (pdf)

Dimensionality reduction techniques extract low-dimensional structure from high-dimensional data and are widespread in machine learning research. In practice, because labeled data are often lacking, unsupervised dimensionality reduction algorithms are preferred. Among them, Principal Component Analysis (PCA) is the most widely used approach. In recent research, several robust PCA algorithms were presented to enhance the robustness of the PCA model. However, all existing robust PCA methods incorrectly center the data using the L2-norm distance to calculate the mean, which is not the optimal mean given the L1-norm used in the objective functions. It is non-trivial to remove the optimal mean in robust PCA because of the sparsity-inducing norms used in the robust formulations. In this paper, we propose novel robust PCA objective functions that remove the optimal mean automatically. We naturally integrate the mean calculation into the dimensionality reduction optimization, such that the optimal mean can be obtained to enhance the dimensionality reduction. Both theoretical analysis and empirical studies demonstrate that our new methods can reduce data dimensionality more effectively than previous robust PCA methods.
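The centering issue can be illustrated with a small sketch. It does not reproduce the paper's objective or algorithm, which optimize the mean jointly with the projection. It only shows that the ordinary (squared-L2) mean does not minimize a sum of non-squared distances. The Weiszfeld-style iteration and all names below are choices made for this example.

```python
import numpy as np

def sum_of_distances(X, c):
    """Sum of (non-squared) Euclidean distances from the rows of X to c."""
    return np.linalg.norm(X - c, axis=1).sum()

def geometric_median(X, n_iter=200, eps=1e-12):
    """Weiszfeld iteration: iteratively reweighted mean that decreases
    the sum of non-squared distances."""
    c = X.mean(axis=0)                       # start from the ordinary mean
    for _ in range(n_iter):
        dist = np.maximum(np.linalg.norm(X - c, axis=1), eps)
        w = 1.0 / dist                       # far points get small weights
        c = (w[:, None] * X).sum(axis=0) / w.sum()
    return c

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
X[:5] += 50.0                                # five gross outliers

mean = X.mean(axis=0)
median = geometric_median(X)
print(sum_of_distances(X, mean), sum_of_distances(X, median))
```

With outliers present, the ordinary mean is pulled toward them, while the center that minimizes the non-squared objective stays close to the bulk of the data.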

-

Farhad Pourkamali Anaraki and Shannon Hughes

Memory and Computation Efficient PCA via Very Sparse Random Projections (pdf)

Algorithms that can efficiently recover principal components in very high-dimensional, streaming, and/or distributed data settings have become an important topic in the literature. In this paper, we propose an approach to principal component estimation that utilizes projections onto very sparse random vectors with Bernoulli-generated nonzero entries. Indeed, our approach is simultaneously efficient in memory/storage space, efficient in computation, and produces accurate PC estimates, while also allowing for rigorous theoretical performance analysis. Moreover, one can tune the sparsity of the random vectors deliberately to achieve a desired point on the tradeoffs between memory, computation, and accuracy. We rigorously characterize these tradeoffs and provide statistical performance guarantees. In addition to these very sparse random vectors, our analysis also applies to more general random projections. We present experimental results demonstrating that this approach allows for simultaneously achieving a substantial reduction of the computational complexity and memory/storage space, with little loss in accuracy, particularly for very high-dimensional data.
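A very sparse random projection of the kind described above might look like the following sketch. The specific construction (entries equal to +sqrt(s) or -sqrt(s) with total probability 1/s, and zero otherwise) and the names `very_sparse_projection`, `d`, `m`, and `s` are assumptions for illustration. The abstract does not spell out the exact distribution or the principal component estimator, so only the projection step and a basic sanity check are shown.

```python
import numpy as np

def very_sparse_projection(d, m, s, seed=0):
    """Return a (d, m) matrix whose entries are +sqrt(s) or -sqrt(s) with
    probability 1/(2s) each and 0 otherwise, so each entry has unit variance.
    A dense array is used here for brevity; a sparse format would be needed
    to actually realize the memory savings."""
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(d, m))
    mask = rng.random((d, m)) < 1.0 / s      # Bernoulli(1/s) nonzero pattern
    return np.sqrt(s) * signs * mask

d, m = 1000, 2000
s = np.sqrt(d)                               # larger s means sparser vectors
R = very_sparse_projection(d, m, s)

x = np.random.default_rng(1).normal(size=d)
y = R.T @ x                                  # m sparse projections of x
est = (y @ y) / m                            # estimates ||x||^2
rel_err = abs(est - x @ x) / (x @ x)
density = np.count_nonzero(R) / R.size
print(density, rel_err)
```

Raising `s` lowers the fraction of nonzero entries, and with it the storage and multiplication cost, at the price of noisier estimates; this is the kind of tradeoff the abstract refers to.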

-

Risi Kondor and Nedelina Teneva and Vikas Garg

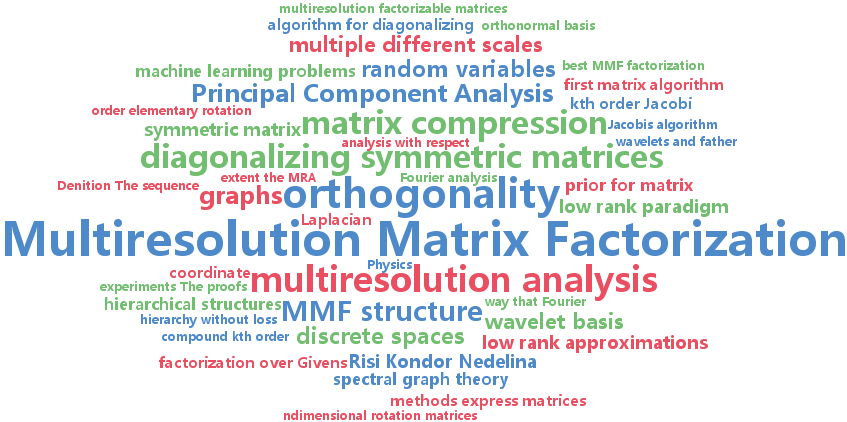

Multiresolution Matrix Factorization (pdf)

The types of large matrices that appear in modern Machine Learning problems often have complex hierarchical structures that go beyond what can be found by traditional linear algebra tools, such as eigendecompositions. Inspired by ideas from multiresolution analysis, this paper introduces a new notion of matrix factorization that can capture structure in matrices at multiple different scales. The resulting Multiresolution Matrix Factorizations (MMFs) not only provide a wavelet basis for sparse approximation, but can also be used for matrix compression (similar to Nystrom approximations) and as a prior for matrix completion.

-

Uri Shalit and Gal Chechik

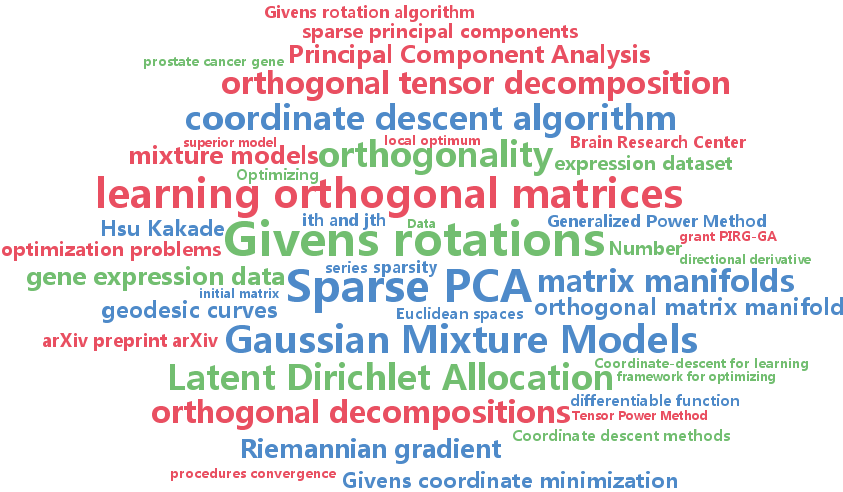

Coordinate-descent for learning orthogonal matrices through Givens rotations (pdf)

Optimizing over the set of orthogonal matrices is a central component in problems like sparse-PCA or tensor decomposition. Unfortunately, such optimization is hard since simple operations on orthogonal matrices easily break orthogonality, and correcting orthogonality usually costs a large amount of computation. Here we propose a framework for optimizing orthogonal matrices, that is the parallel of coordinate-descent in Euclidean spaces. It is based on Givens-rotations
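The following sketch illustrates why a Givens rotation is a convenient elementary update: multiplying an orthogonal matrix by a rotation in a single coordinate plane keeps it orthogonal, whereas an ordinary additive step does not. It does not implement the paper's coordinate-descent procedure; the helper names, the chosen plane `(1, 3)`, and the angle are arbitrary choices for the example.

```python
import numpy as np

def givens(n, i, j, theta):
    """n x n rotation by angle theta in the (i, j) coordinate plane."""
    G = np.eye(n)
    c, s = np.cos(theta), np.sin(theta)
    G[i, i] = c
    G[j, j] = c
    G[i, j] = -s
    G[j, i] = s
    return G

def orth_error(Q):
    """Frobenius-norm distance of Q^T Q from the identity."""
    return np.linalg.norm(Q.T @ Q - np.eye(Q.shape[0]))

rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(5, 5)))     # a random orthogonal matrix

Q_add = Q + 0.1 * rng.normal(size=(5, 5))        # additive step: breaks orthogonality
Q_rot = Q @ givens(5, 1, 3, 0.7)                 # Givens step: preserves it

print(orth_error(Q_add), orth_error(Q_rot))
```

Because the rotation touches only two columns of `Q`, the product can be computed in O(n) time by updating those columns directly instead of forming the full matrix `G`.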

-

Qian Zhao and Deyu Meng and Zongben Xu and Wangmeng Zuo and Lei Zhang

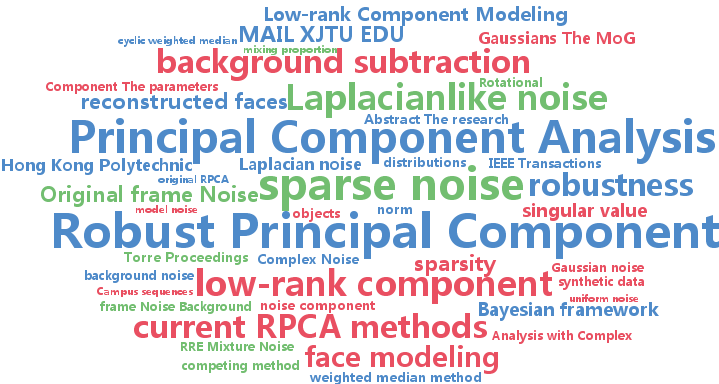

Robust Principal Component Analysis with Complex Noise (pdf)

Research on robust principal component analysis (RPCA) has been attracting much attention recently. The original RPCA model assumes sparse noise and uses the $L_1$-norm to characterize the error term. In practice, however, the noise is much more complex and it is not appropriate to simply use a certain $L_p$-norm for noise modeling. We propose a generative RPCA model under the Bayesian framework by modeling data noise as a mixture of Gaussians (MoG). The MoG is a universal approximator to continuous distributions and thus our model is able to fit a wide range of noise types, such as Laplacian, Gaussian, and sparse noise, and any combination of them. A variational Bayes algorithm is presented to infer the posterior of the proposed model. All involved parameters can be recursively updated in closed form. The advantage of our method is demonstrated by extensive experiments on synthetic data, face modeling and background subtraction.